Itai Cohen holding up a miniature robot. [Image: Jason Koski/Cornell University]

Scientists in the United States have shown how to combine microscopic robotics with diffractive optics to measure and manipulate visible light on the microscale (Science, doi: 10.1126/science.adr2177). Their “diffractive robots,” which rely on programmable nanomagnets and mechanical membranes just a few nanometers thick, can be made to walk, record images below the diffraction limit, steer light beams and sense exceptionally weak forces, according to the researchers. They say that these devices may have applications in medicine and electronics.

Miniaturizing joints

Tiny mechanical devices and diffractive optics have previously been brought together in the form of micro-optical microelectromechanical systems (MEMS). However, miniaturizing such systems to make microscopic robots has been impeded by the need to create nanometer-scale hinges that are both flexible and durable.

In the latest work, Itai Cohen, Paul McEuen, Francesco Monticone, Conrad Smart and colleagues at Cornell University say they have overcome this problem by using atomic layer deposition to make flexible silicon-dioxide joints just 5 nm thick. The researchers produced thousands of diffractive robots from a 100-mm² silicon chip, with each robot consisting of a number of diffractive panels linked by the newly developed hinges, and each panel patterned with arrays of single-domain cobalt nanomagnets.

Operating the robots relies on the roughly 100-nm-long nanomagnets coming in two forms—either short and fat or long and thin. The long, thin domains are harder to flip, so a large applied magnetic field can align all the domains along the same axis, but a smaller field is only able to flip the short, fat domains. This distinction allowed the researchers to program an arbitrary pattern of north- and south-oriented magnetic domains.
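The two-step programming described above can be illustrated with a minimal sketch. It is a toy model rather than the researchers' procedure: the `Nanomagnet` class, the `apply_field` helper and the switching thresholds `HARD` and `SOFT` are hypothetical, and the field values are in arbitrary units chosen only to show the ordering of the steps.

```python
from dataclasses import dataclass


@dataclass
class Nanomagnet:
    shape: str              # "long_thin" or "short_fat"
    switching_field: float  # assumed field magnitude needed to flip (arbitrary units)
    moment: int = +1        # +1 = north, -1 = south


def apply_field(magnets, field):
    """Align every magnet whose switching field is at or below |field| with the field."""
    direction = 1 if field > 0 else -1
    for m in magnets:
        if abs(field) >= m.switching_field:
            m.moment = direction


# Hypothetical thresholds: long, thin magnets are harder to flip than short, fat ones.
HARD, SOFT = 100.0, 40.0

magnets = [
    Nanomagnet("long_thin", HARD),
    Nanomagnet("short_fat", SOFT),
    Nanomagnet("long_thin", HARD),
    Nanomagnet("short_fat", SOFT),
]

apply_field(magnets, +150.0)  # step 1: a large field aligns every magnet (all north)
apply_field(magnets, -60.0)   # step 2: a smaller reversed field flips only the short, fat ones

pattern = [m.moment for m in magnets]
print(pattern)
```

In this toy model, the layout of the two magnet shapes on a panel fixes which sites end up north and which end up south after the second step.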

Walking, record-breaking robots

Smart, who spearheaded the experimental effort, used a very simple robot to demonstrate locomotion, and he beat some records in the process. This robot consisted of two panels joined by a pair of hinges, which in the absence of magnetism lie flat on the silicon substrate. When the scientists applied a uniform magnetic field, it caused the panels to rise up and create a roof shape, with a stronger field pushing them closer to the vertical. By instead applying two sinusoidal fields at right angles to one another, the researchers were able to introduce asymmetry into this movement, causing the robot to walk either forward or backward.

Smart and his coworkers found that, although there was a limit to walking speed caused by drag, smaller robots could walk more quickly, and in so doing set new records for both size (their 5-μm length being about five times smaller than the previous minimum) and speed (managing about three body lengths per second). The researchers also showed how to guide such robots around a maze and use them to trace out letters (in this case forming the word “Cornell”), while in addition demonstrating how they can swim in a liquid. Further, they showed how to make more complex, origami-like structures, which could also be made to walk.

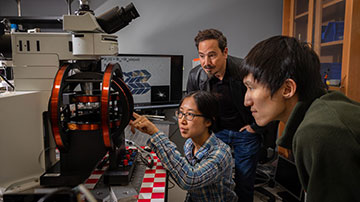

Research coauthors Melody Lim (left), Itai Cohen (center) and Zexi Liang are seen here in Cohen’s lab at Cornell University. [Image: Jason Koski/Cornell University]

Imaging, steering and detecting

Building on this work, Cohen's group then carried out subdiffractive microscopy using a robot formed from a diffraction grating and two magnetized panels. Moving the robot across an object, they captured a series of images of the object at different angles and phases, before combining the images to beat the resolution usually imposed by diffraction. Specifically, they showed that they could distinguish four metal dots spaced just 600 nm apart at the corners of a square, which under diffraction-limited imaging appeared to form a single homogeneous blob.

But imaging was not the only optical trick up the group's sleeve. McEuen led work that showed how to use the tiny robots to both steer light and detect tiny forces. In this case, the robots consisted of a diffraction grating formed from six panels (connected by hinges) that move closer together when exposed to a magnetic field. The researchers were able to shift light thanks to the fact that the central and lateral bright peaks in the grating's diffraction pattern move further apart when the panels are compressed and vice versa. Conversely, they showed how to measure an unknown external field (with a sensitivity of a mere 1 × 10⁻¹² N) by virtue of its effect on the peaks' positions.
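The link between panel spacing and peak position follows from the standard grating equation at normal incidence, sin θ = mλ/d, where d is the grating pitch, λ the wavelength and m the diffraction order. The sketch below is a rough illustration only: it assumes that compressing the panels simply reduces the effective pitch, and the wavelength and pitch values are made up for the example rather than taken from the study.

```python
import math


def diffraction_angle_deg(wavelength_nm, pitch_nm, order=1):
    """Angle of a diffraction order from the grating equation at normal incidence."""
    s = order * wavelength_nm / pitch_nm
    if abs(s) > 1:
        raise ValueError("this order does not propagate for the given pitch")
    return math.degrees(math.asin(s))


wavelength_nm = 532.0  # assumed green laser wavelength, illustration only

relaxed = diffraction_angle_deg(wavelength_nm, pitch_nm=2000.0)     # hypothetical relaxed pitch
compressed = diffraction_angle_deg(wavelength_nm, pitch_nm=1500.0)  # hypothetical compressed pitch

print(f"first-order angle, relaxed:    {relaxed:.1f} degrees")
print(f"first-order angle, compressed: {compressed:.1f} degrees")
```

A smaller pitch sends the first-order peak to a larger angle, moving it away from the central peak. Read in reverse, a measured shift in peak position indicates how far the panels have moved.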

They claim that the research represents “a substantial advancement in the field of microscopic robots” and reckon that it could be exploited in endoscopy, cell microscopy and magnetic-field sensing. The team also envisages that swarms of such robots might be used in the near future to extend the capabilities of a microscope's lens, enabling, for example, increased resolution, lateral views of objects and localized measurements of tissue stiffness.

According to Cohen, each silicon wafer costs about US$10,000 to make. But with a million robots per wafer, that equates to just one cent per robot. “We just need to figure out how scientists want to use them, and we can start shipping them out,” he says. But he adds that certain applications still need development—putting the robots at the end of a fiber, for example, will involve working out how to marry fiber technology with the diffractive panels.