
A team at the University of Oxford, the University of Exeter, and the University of Münster has prototyped a system for associative learning—famously exemplified by Pavlov’s experiments with dogs—on an optical chip. [Image: Z. Cheng]

It’s a story familiar to any high-school science student. In the early 1900s, the Russian physiologist Ivan Pavlov, by repeatedly associating the availability of food with the ringing of a bell, was able to train dogs to salivate in response to the sound—even if no food was present. The experiment is a classic example of associative learning, in which the repeated pairing of unrelated stimuli ultimately produces a learned response.

Associative learning plays a fundamental role in how humans and animals interpret and navigate the world. Yet it has been surprisingly absent as a framework for artificial-intelligence systems. Now, scientists from the United Kingdom and Germany have prototyped a system that implements Pavlovian associative learning on an optical chip, using waveguides, phase-change materials and wavelength-division multiplexing (Optica, doi: 10.1364/OPTICA.455864).

The researchers behind the new study note that, for certain classes of problems, the system can avoid some of the laborious rounds of backpropagation-based training common in more conventional deep neural networks, saving energy and reducing computational overhead. And, while the work is still at an early stage, the researchers believe a photonic associative-learning platform could eventually add a new building block to the toolkit for designing machine-learning systems.

Modeling associative learning

In a real biological system, associative learning happens when two neural signals associated with two different stimuli (for example, the food and the bell in the case of Pavlov’s dog) arrive at the same time at a given neuron, such as a motor neuron that controls salivation. After many such simultaneous arrivals, the neuron learns to associate one stimulus with the other. From then on, either stimulus alone (the food or the bell) is enough to trigger the motor neuron into action.
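The rule just described can be written down as a toy program. The sketch below is a hypothetical illustration, not the researchers' model: the class name `AssociativeNeuron`, its parameters, and all numeric values are assumptions. The food (s1) drives the neuron through a fixed weight that already exceeds the firing threshold; the bell (s2) starts with zero weight, which grows only on trials where both stimuli arrive together.

```python
from dataclasses import dataclass


@dataclass
class AssociativeNeuron:
    """Toy neuron that learns to respond to s2 after repeated pairing with s1.

    All parameter values are illustrative assumptions.
    """

    w1: float = 1.0              # fixed weight of the unconditioned stimulus s1 (food)
    w2: float = 0.0              # learnable weight of the conditioned stimulus s2 (bell)
    threshold: float = 0.5       # weighted input needed to fire
    learning_rate: float = 0.15  # increase in w2 per simultaneous arrival

    def fires(self, s1: int, s2: int) -> bool:
        """Return True if the weighted input reaches the firing threshold."""
        return self.w1 * s1 + self.w2 * s2 >= self.threshold

    def present(self, s1: int, s2: int) -> bool:
        """Run one trial; strengthen w2 only when both stimuli coincide."""
        fired = self.fires(s1, s2)
        if s1 and s2:
            self.w2 = min(1.0, self.w2 + self.learning_rate)
        return fired


neuron = AssociativeNeuron()
print(neuron.fires(0, 1))  # False: bell alone, before training
for _ in range(5):
    neuron.present(1, 1)   # pair food with bell
print(neuron.fires(0, 1))  # True: bell alone, after training
```

In this sketch the bell alone first triggers the neuron after the fourth pairing; that count follows purely from the assumed threshold and increment, not from any experimental data.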

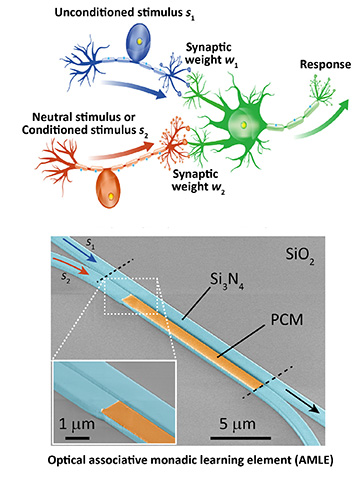

Top: In a simplified neural circuit, after stimulus s2 becomes associated with stimulus s1, both stimuli independently will elicit the same response. Bottom: The team behind the new work implemented associative learning using silicon nitride waveguides and a phase-change material (PCM). [Image: J.Y.S. Tan et al., Optica, doi: 10.1364/OPTICA.455864 (2022)]

To build a photonic system that would mimic this model of learning, the research team, including scientists at the University of Oxford and the University of Exeter, UK, and the University of Münster, Germany, broke the model down into its two main functions. The first is bringing the two signals together so that they can actually coincide in time. The second is remembering that association and using it to condition future behavior.

Waveguides and a phase-change material

The team implemented the first function, converging and associating the signals, through two directionally coupled silicon nitride (Si₃N₄) waveguides, each of which carries one of the two stimuli. To record and remember the association, the team coated the lower of the two waveguides with a germanium–antimony–tellurium (GST) phase-change material, Ge₂Sb₂Te₅, which modulates the degree of coupling between the two waveguides.

Before the associative learning begins, the GST film is in a fully crystalline state. In that state, there’s no coupling between the waveguides. Thus, a response or output occurs only when a signal comes in via the upper waveguide (analogous to the “food” in the Pavlovian case), with no output transmitted when an unaccompanied signal comes from the lower waveguide (the “bell”).

When optical stimuli arrive simultaneously in the two waveguides, however, the combined energy from the two pulses exceeds the threshold necessary to locally change the phase of the GST film, making part of the film amorphous rather than crystalline. That local change in the GST film increases the optical coupling between the two waveguides.

Eventually, after repeated associations and further amorphization of the GST film, the coupling between the upper and lower waveguides becomes strong enough that a signal sent only through the lower waveguide, without an accompanying signal from the upper one, will result in an output, causing the artificial neuron to fire. The bell rings; the dog salivates.
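The same sequence can be mimicked numerically at the device level. This is a hypothetical, heavily simplified sketch, not the device physics reported in the paper: the class `ToyAMLE`, the pulse energy, the switching threshold, the amorphization step, and the linear link between amorphous fraction and coupling are all invented for illustration. What it keeps is the logic of the paragraphs above: one pulse on its own stays below the switching threshold and leaves the GST film untouched, whereas two coincident pulses exceed it and amorphize a little more of the film each time.

```python
# All parameters are hypothetical, in arbitrary units, chosen only for illustration.
PULSE_ENERGY = 0.6         # energy delivered by a single stimulus pulse
SWITCH_THRESHOLD = 1.0     # energy needed to amorphize part of the GST film
AMORPHIZATION_STEP = 0.2   # extra amorphous fraction created per coincidence
DETECTION_THRESHOLD = 0.5  # output level at which the artificial neuron "fires"


class ToyAMLE:
    """Two coupled waveguides whose coupling is set by a phase-change layer."""

    def __init__(self) -> None:
        # The GST film starts fully crystalline: no coupling between waveguides.
        self.amorphous_fraction = 0.0

    def coupling(self) -> float:
        # Assumption: coupling rises linearly with the amorphous fraction.
        return self.amorphous_fraction

    def output(self, upper: bool, lower: bool) -> float:
        # The upper ("food") signal reaches the output directly; the lower
        # ("bell") signal gets there only through the PCM-controlled coupling.
        return float(upper) + float(lower) * self.coupling()

    def stimulate(self, upper: bool, lower: bool) -> bool:
        """Apply one trial and return whether the neuron fired."""
        fired = self.output(upper, lower) >= DETECTION_THRESHOLD
        energy = PULSE_ENERGY * (int(upper) + int(lower))
        # With these values one pulse (0.6) stays below the threshold,
        # while two coincident pulses (1.2) exceed it.
        if energy > SWITCH_THRESHOLD:
            self.amorphous_fraction = min(
                1.0, self.amorphous_fraction + AMORPHIZATION_STEP
            )
        return fired


element = ToyAMLE()
print(element.stimulate(upper=False, lower=True))  # False: bell alone, untrained
for _ in range(3):
    element.stimulate(upper=True, lower=True)  # pair "food" with "bell"
print(element.stimulate(upper=False, lower=True))  # True: bell alone now fires
```

In this toy model the learning rule is never written as an explicit "both stimuli present" test; it emerges from the energy threshold, which is the role the GST film plays in the device.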

From single device to chip

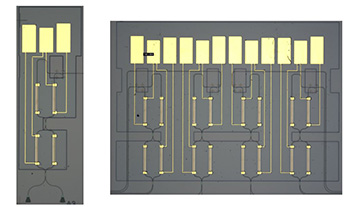

Photomicrograph of a single “associative monadic learning element” (left) and an array of four such devices on a chip (right). [Image: J.Y.S. Tan]

The team ran a variety of single-device tests on such an “associative monadic learning element” (AMLE) and confirmed that the system could be taught to associate two different, optically delivered stimuli. With that confirmation in hand, the team then packed four AMLEs onto a single optical chip. Cascaded Mach-Zehnder modulators (MZMs), tied to thermo-optic heaters, were used to ensure a stable optical input. The MZMs also let the team take advantage of wavelength-division multiplexing, so that multiple, more complex inputs could be fed into the system simultaneously.

Using its four-AMLE chip as part of a three-layer associative-learning network, the team then put the chip to work on a simple “cat versus dog” identification test. After five rounds of training using only 10 paired cat images, the system was able to distinguish cat from non-cat images. That result suggested that an artificial associative-learning system could be conditioned quickly for this simple task, without the training set of thousands of images that some conventional artificial neural networks (ANNs) might require.

A tool for “volume-based” problems?

The researchers behind the new work believe that this potential to reduce training costs is a key advantage of their system for certain kinds of problems. Conventional neural networks, in addition to requiring large data sets for training, also demand laborious, repeated rounds of so-called backpropagation to fine-tune the weights in the multilayer network until it gives the right answer for an arbitrary input. The Oxford–Exeter–Münster team’s photonic associative-learning approach, in contrast, dispenses with that labor-intensive backpropagation step. Instead, it learns through repeated, direct modification of the phase-change material, potentially saving computational and energy costs.

That said, the researchers stress that associative learning is a good fit only for certain kinds of AI problems. The paper’s first author, James Y.S. Tan, who did the work as part of his doctoral studies at Oxford, notes that while conventional AI approaches are computationally intensive, “the benefit is that we can acquire deep features,” or complex patterns, using them.

Complex analysis of this type isn’t likely to be the sweet spot for associative learning. “But,” Tan says, “not all problems require acquiring such deep features,” and for these kinds of problems, the associative approach could be considerably more efficient than conventional ANNs. Such problems might be characterized as “volume based”—that is, situations in which a system does not need to analyze highly complex features in a data set, but only to rapidly establish whether a correlation exists between two separate inputs in a high-volume data stream.

“The real difference here is that if you have streams of data that are not exactly the same, but you know are correlated, the system can learn those correlations,” Oxford’s Harish Bhaskaran, who led the study, told OPN. “And then, when you get a new stream of data, it can recognize whether that is correlated or not immediately. That is really powerful.”
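To make the "volume-based" idea concrete, the sketch below answers the same kind of question in a conventional, electronic way: it streams paired samples through a one-pass (Welford-style) Pearson correlation and reports whether the two inputs are correlated. This is a swapped-in statistical baseline for illustration, not the photonic associative-learning method described here; the `StreamingCorrelation` class, the `is_correlated` helper, the 0.8 threshold, and the synthetic data are all assumptions.

```python
import math
import random
from typing import Iterable, Tuple


class StreamingCorrelation:
    """One-pass (Welford-style) Pearson correlation between two streams."""

    def __init__(self) -> None:
        self.n = 0
        self.mean_x = 0.0
        self.mean_y = 0.0
        self.m2_x = 0.0  # running sum of squared deviations of x
        self.m2_y = 0.0  # running sum of squared deviations of y
        self.c_xy = 0.0  # running co-moment of x and y

    def update(self, x: float, y: float) -> None:
        """Fold one (x, y) sample into the running statistics."""
        self.n += 1
        dx = x - self.mean_x           # deviation from the old mean of x
        self.mean_x += dx / self.n
        dy = y - self.mean_y           # deviation from the old mean of y
        self.mean_y += dy / self.n
        self.m2_x += dx * (x - self.mean_x)
        self.m2_y += dy * (y - self.mean_y)
        self.c_xy += dx * (y - self.mean_y)

    def correlation(self) -> float:
        """Pearson correlation of everything seen so far (0.0 if undefined)."""
        if self.m2_x == 0.0 or self.m2_y == 0.0:
            return 0.0
        return self.c_xy / math.sqrt(self.m2_x * self.m2_y)


def is_correlated(pairs: Iterable[Tuple[float, float]], threshold: float = 0.8) -> bool:
    """Hypothetical helper: True if the streamed pairs reach the threshold."""
    tracker = StreamingCorrelation()
    for x, y in pairs:
        tracker.update(x, y)
    return tracker.correlation() >= threshold


rng = random.Random(0)
xs = [rng.gauss(0.0, 1.0) for _ in range(10_000)]
related = [(x, x + 0.3 * rng.gauss(0.0, 1.0)) for x in xs]  # noisy copy of x
unrelated = [(x, rng.gauss(0.0, 1.0)) for x in xs]          # independent of x
print(is_correlated(related))    # True
print(is_correlated(unrelated))  # False
```

A one-pass update is used because it never stores the stream, which is the constraint that matters for high-volume data.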

Bhaskaran adds that a photonic platform turned out to be a natural fit for a model of learning that, he says, is “very difficult to achieve electronically.” With photonics, he notes, “you can actually couple two waveguides across a little barrier, program a device … We thought about how we can achieve this all electronically. I’m sure there are ways, but we haven’t figured it out.”