Three terra cotta oil lamps from early Rome.

Light is the physical stimulus that enables vision, a process of nearly unimaginable complexity that allows us to comprehend and respond to the world around us. But relying on natural light would leave us—literally and figuratively—in the dark much of the time. Thus, the history of lighting is a history of our learning the technical art of producing and delivering light.

A world lit only by fire

For many millennia, lighting relied on managing the combustion of fuels. The first records of fire-making appear in the Neolithic period, about 10,000 years ago. In 1991, scientists discovered a Neolithic man, dubbed “Otzi,” who was preserved in an Alpine glacier. Otzi carried on his belt a fire-making kit: flints, pyrite for striking sparks, a dry powdery fungus for tinder, and embers of cedar that had been wrapped in leaves.

Wood was the first fuel used for lighting. Homer’s poems from nearly 3,000 years ago recount the use of resinous pine torches. Resinous pitch is very flammable and luminous when burned. It was probably used in its naturally occurring state as it oozed from coniferous trees.

In Roman times, pitch was melted and smeared on bundled sticks to make more controllable torches. Later, wood treated with pitch was burned in bowls or openwork metal buckets called cressets that made the light portable. By medieval times, processing pitch from coniferous trees was a trade governed by guilds.

Evidence of oil being burned in lamps emerged more than 4,500 years ago in Ur, an ancient city in southern Mesopotamia (modern day Iraq). The earliest lighting oils were made from olives and seeds. Olive cultivation had spread throughout the Mediterranean by 3,000 years ago, and olive oil became widely used for lighting. About 3,500 years ago, sesame plants were being cultivated in Babylon and Assyria, and oil from the seed was being burned. Olive and sesame oils were burned in small lamps, sometimes with a wick formed from a rush or twisted strand of linen. Lamps of stone, terra cotta, metal, shell and other materials have been found throughout the ancient world.

Animal oils were more common in Northern Europe, where oil was obtained from fish and whales. Early in the 18th century, sperm whale oil was found to be an excellent illuminant, and whaling grew tremendously as a result. Whale oil and the lamps that burn it were common in colonial America.

Eventually, Antoine Lavoisier’s science of oxygen and combustion touched the ancient craft of burning oil. In 1780, Ami Argand invented a hollow, circular wick and burner—more luminous and efficient than previous oil lamps. Argand’s lamp was modified in the century following its introduction, and was later adapted for use with coal gas when an efficient burner was needed.

Animal grease was likely among the earliest of fuels used for lighting, with an antiquity second only to wood. Evidence for controlled fire in hearths appears about 250,000 years ago, and it is not hard to imagine early humans noticing that fat burned while their meat roasted. The Lascaux cave paintings produced in France 15,000 years ago were likely created using illumination from burning animal grease in lamps; more than 100 such lamps were found in the cave.

Tallow, which is rendered and purified animal fat, has been used for lighting since early Egyptian civilization. Though initially burned in lamps, it was used for candle-making for nearly 2,000 years. A protocandle was made in Egypt using the pithy stalk of the plentiful rush soaked in animal fat. When dried, it burned brightly. These “rushlights” were used throughout Europe, in some places until the 19th century.

Evidence of modern candles emerged from Rome in the 1st century A.D. These candles were made with a small wick and a thick, hand-formed layer of tallow. In early medieval times, candles were made by pouring and later by dipping, a method that changed little in the centuries that followed. Though wax was also used, wax candles were used almost exclusively for liturgical purposes, being too expensive for ordinary lighting.

In the 19th century, Michel Chevreul made chemical advances that enabled the production of stearine, a tallow derivative that made far superior candles. Using a soap-making process, chemists separated stearine from the liquid oleic acid in tallow. In 1830, Karl Reichenbach isolated a solid crystalline substance from coal that was stable and burned readily. He named it paraffin. After 1860, paraffin was distilled from petroleum and vast quantities were produced, enabling high-quality candles to be made cheaply.

Gas lighting—Without the wick

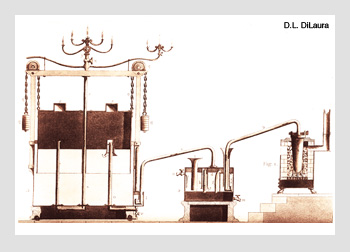

Early coal gas system. The gas generator is on the right, showing the retort holding coal and the fire used to heat it. The water scrubber in the middle shows gas being bubbled through water to remove impurities. The storage tank on the left has an inverted cylinder counter-balanced over water. This arrangement provided a more-or-less constant gas pressure at the outlet. On top of the tank are typical, very early gas burners.

A complex hydrocarbon produced by distilling coal, “coal gas” revolutionized lighting in urban areas. The candle or oil lamp was replaced with the luminous flame of burning gas issuing from the end of a winding labyrinth of pipe that led from the gas factory and its storage tanks, through the streets, and into home, church, theater, store and office.

Coal is rock that burns. During the 17th century, it was widely known that heating coal produced a flammable gas. But it was not until 1792 that William Murdoch experimented with a practical system to distill and distribute coal gas for illumination. He developed early practical coal gas lighting systems between 1805 and 1813.

The entrepreneur Frederick Winsor drew on the work of the early French experimentalist Philippe Lebon. In competition with Murdoch, Winsor established in 1812 the London and Westminster Chartered Gaslight and Coke Company, the world’s first gas company. Soon, many companies arose that manufactured and distributed coal gas for major cities.

The first U.S. municipal gas plant was established in Baltimore, Md., in 1816. That same year, German mineralogist W.A. Lampadius established one of the first gasworks in Germany at Freiburg. By 1860, there would be more than 400 gas companies in the United States, 266 in Germany, and more than 900 in Great Britain.

By 1825, gas light cost a quarter as much as lighting by oil or candles, and so its use grew rapidly. Over the next 50 years, coal gas lighting became a mature industry with a highly developed technology in Europe, England and the United States.

Illuminating gas was produced by subjecting coal to considerable heat for several hours in a closed container. The heat and near absence of oxygen separated the coal into solid, liquid and gas hydrocarbons. The gas was led off and, after cooling and condensing, it was washed and scrubbed. It was then pumped to storage tanks.

Gas traveled from these tanks by underground pipes, usually following main streets, and was delivered to homes and businesses, where it was metered and finally piped to individual metal burners. By 1858, the great British gas industrialist William Sugg had patented burners made from the mineral steatite or soapstone. Soft enough to shape, soapstone was sufficiently refractory to the effects of heat and corrosion.

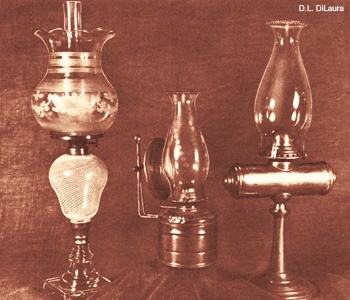

Kerosene lamps of the 19th century. They all used the kerosene burner developed by Michael Dietz in 1868. The “Dietz burner” became a worldwide standard.

In 1882, Carl Auer von Welsbach of the University of Vienna found that “rare earth” elements incandesced with a brilliant light. He soaked fiber webbing in a salt solution of these elements and produced a mantle to be used over a gas burner. The webbing burned and left a skeleton of oxide ash, which, when heated, incandesced brightly. In 1891, he opened a company to sell these mantles. In the final commercial version, they were made with an impregnating solution that contained 0.991 parts thoria to 0.009 parts ceria. Improvement in gas mantle burners eventually achieved luminous efficiencies that were 10 times that of the old open flame burners. The Welsbach mantle revolutionized gas lighting and the coal gas industry.

The 19th century saw the rise and fall of gas lighting, which spread rapidly and remained unchallenged as a light source until the introduction of electric arc and incandescent lighting. Even with the great improvement provided by von Welsbach’s incandescent mantle, by 1910 gas lighting could no longer compete with electric lighting for economy, convenience and safety.

Kerosene lights in the 19th century

What gas lighting was to urban areas in the 19th century, kerosene was to rural communities. A liquid hydrocarbon discovered and named in 1854 by Abraham Gesner, kerosene was initially distilled from coal and so-called “coal oil.” It had ideal illuminating characteristics: It was not explosive but burned with a luminous, smokeless flame.

Early U.S. research and development was driven by the continuing need for an inexpensive replacement for illuminating oils in rural areas. In 1846, the Canadian chemist Abraham Gesner found that distilling coal yielded kerosene, which had an obvious practical value as an illuminant.

In 1857, Joshua Merrill was chief engineer of the U.S. Chemical Manufacturing Company. He studied Gesner’s distillation and purification process and made it more effective and appropriate for commercialization. By 1858, the company was producing 650,000 gallons of kerosene annually.

What proved to be just as important was Merrill’s introduction of “cracking”—repeatedly subjecting heavy oils to elevated temperatures that broke up the large hydrocarbon molecules into smaller ones, suitable for burning in lamps. This would become crucial to the use of petroleum.

The idea of using petroleum—Latin for “rock oil”—rather than coal as a source of illuminating oil was common by the mid-19th century. In 1854, the Pennsylvania Rock Oil Company was formed and its founders purchased property at Titusville, Pa. Benjamin Silliman Jr. of Yale University performed a photometric analysis of the petroleum from Titusville; he clearly showed the great potential of petroleum and estimated that at least 50 percent of the crude oil could be distilled into an illuminant.

Edwin Drake was hired to begin drilling. In August 1859, he reached a depth of 69 feet and found oil. His success in pumping oil from that well at Titusville marked the beginning of the oil industry and the availability of large supplies of petroleum and illuminating oils.

Arc lighting—Electricity made visible

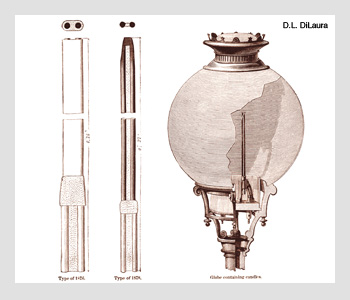

Jablochkoff electric arc light. Carbons are side-by-side, separated by plaster. Usually, more than one “candle” was placed inside a diffusing globe to reduce the glare of the arc and distribute the light uniformly.

That light could be produced by an electric arc between rods of carbon had been known since 1800. But an arc was not practical since the source of electricity at that time was the voltaic pile. In 1831, Michael Faraday discovered electromagnetic induction and successfully produced electricity directly from magnetism. News of Faraday’s discoveries spread quickly and, by 1844, commercial electric generators were being used for electroplating. By 1860, lighthouses in England and France used arc lights powered by electric dynamo machines.

In 1867, William Siemens pointed the way to the most important of the early electric generating machines, that of Theophile Gramme. Gramme’s dynamos were significantly more efficient than previous machines and had a simple construction that made them reliable and easy to maintain. They generated large amounts of electrical power and renewed efforts to improve arc lights.

All arc lights had been operated on unidirectional current and the positive and negative carbons were unequally consumed. Elaborate mechanisms had been devised to maintain a constant arc gap, but they limited the practicality of arc lighting. In 1876, Paul Jablochkoff devised a startlingly simple solution: use alternating current so that both carbons would be consumed at the same rate.

He placed the carbons parallel to each other, separated by a thin layer of plaster, so that the arc was perpendicular to the axis of the carbons. As burning continued, the plaster and the carbons were consumed, and the arc moved downward, similar to the burning of a candle. This came to be called the “Jablochkoff candle.” It was widely used in Europe, especially in France.

In 1873, Charles Brush began work on an electric generating and arc lighting system. His advances were a simple mechanism that continuously adjusted the carbon rod spacing in his arc lights, and more efficient dynamos. He installed his first system in 1878. In April 1879, he demonstrated the potential of his system for outdoor lighting by lighting Cleveland’s Public Square. Within two years, there were Brush arc lighting systems in the streets of New York, Boston, Philadelphia, Montreal, Buffalo and San Francisco. In each case, there was a central station from which power was distributed to several arc lights.

Like gas lighting, arc lighting rose and fell in the 19th century. It found applications in streets and roadways, halls and stadiums, and large factories, but it was too bright for homes and offices. Its success made clear how large the reward would be for a practical, smaller light source—for the “subdivision of the electric light,” as it was put.

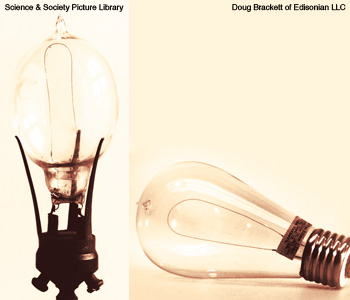

The first commercial incandescent lamps of Joseph Swan (left) and Thomas Edison (right). Swan’s lamp used a cellulose filament and spring-clip mechanism to hold the lamp and deliver electric power. Edison’s used a bamboo filament and a screw base. Edison’s base became a worldwide standard.

Electric incandescent lighting

Early work on incandescent lamps dates from about 1840. For the next 30 years, these lamps used the same general technology: an illuminant of platinum or carbon in a vessel to control the atmosphere. The first lamp based on incandescent platinum appeared in 1840, and incandescent carbon was used in 1845. To make a practical lamp, scientists had to learn how to manage the properties of carbon and platinum at temperatures of incandescence.

The British inventor Joseph Swan worked on incandescent lamp technology for 20 years, beginning in 1860, when he brought a carbonized piece of cardboard to incandescence electrically in the vacuum of a bell jar. By October 1880, he perfected a method for making very thin, high-resistance, uniform carbonized illuminants from cellulose. Lamps based on his work were made in 1881.

Meanwhile, Thomas Edison was also at work on an incandescent lamp. The key advance made in 1879-1880 was the recognition that a successful incandescent lamp would need to have a high-resistance illuminant and must operate in a vacuum deeper than that which was routinely attained at the time. In October 1879, Edison built and tested such a lamp. This first lamp had a “filament”—a word Edison first used—cut from pasteboard and carbonized to white-heat. After much experimentation, Edison settled on filaments made from bamboo for the commercial version of his lamp.

By 1881, Edison’s company was manufacturing complete systems consisting of a dynamo, wiring, switches, sockets and lamps. Such a system could be used to light factories, large department stores and the homes of the wealthy. By the following year, more than 150 of these plants had been sold, powering more than 30,000 incandescent lamps.

Between 1880 and 1920, incandescent lamps were significantly improved by new technologies that made them more efficacious. The earliest lamps had efficacies of 1.7 lumens/watt. In 1920, incandescent lamps had efficacies near 15 lumens/watt. The metallurgical advances that produced tungsten wire, glass and ceramic technology, and developments in mechanical and chemical vacuum pumping, are among the most important.
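To make the meaning of these efficacy figures concrete, the short sketch below computes how much electrical power each generation of lamp would need to deliver the same amount of light. The two efficacies come from the text; the 800-lumen target and the names `watts_needed` and `TARGET_LUMENS` are assumptions chosen only for illustration.

```python
# Efficacy figures quoted in the text (lumens per watt).
EARLY_LAMP_LM_PER_W = 1.7   # earliest incandescent lamps
LAMP_1920_LM_PER_W = 15.0   # incandescent lamps around 1920

# Assumption for illustration only: a target light output of 800 lumens.
TARGET_LUMENS = 800.0


def watts_needed(lumens: float, efficacy_lm_per_w: float) -> float:
    """Electrical power required to emit `lumens` at the given efficacy."""
    if efficacy_lm_per_w <= 0:
        raise ValueError("efficacy must be positive")
    return lumens / efficacy_lm_per_w


early_watts = watts_needed(TARGET_LUMENS, EARLY_LAMP_LM_PER_W)
later_watts = watts_needed(TARGET_LUMENS, LAMP_1920_LM_PER_W)
improvement = LAMP_1920_LM_PER_W / EARLY_LAMP_LM_PER_W

print(f"Earliest lamps: {early_watts:.0f} W for {TARGET_LUMENS:.0f} lm")
print(f"1920 lamps:     {later_watts:.0f} W for {TARGET_LUMENS:.0f} lm")
print(f"Improvement:    {improvement:.1f}x")
```

Under that assumed target, the earliest lamps would draw roughly 470 watts where a 1920 lamp would draw about 53, close to a ninefold improvement in 40 years.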

Discharge lamps—Light from atoms

The luminous electric discharge of low-pressure mercury had been known since early in the 18th century. The first application to lighting was the introduction of mercury into the chamber of an arc light. This lamp, and others like it, produced the characteristic blue-green light of the low-pressure mercury discharge. By 1890, mercury lamps were available that had efficacies of between 15 and 20 lumens/watt. Though unsuitable for normal interior illumination, they were used in industrial and photographic applications.

General Electric Company in England developed a new hard, aluminosilicate glass for use with high pressure, high temperature mercury discharges that produced a much whiter light. In 1932, GEC announced its new high pressure mercury discharge lamp, which exhibited an efficacy of 36 lumens/watt. At about the same time, General Electric in America perfected the fused quartz production process. Quartz arc tubes for high pressure mercury discharge lamps permitted pressures to reach 10 atmospheres, which significantly increased efficacy and broadened the spectrum of the emitted light.

Phosphorescence was first recorded in the West in 1603. However, the first extensive study of this phenomenon was not conducted until Edmond Becquerel researched it in the mid-19th century. He investigated the fluorescence and phosphorescence of many minerals, observing their behavior under various types of excitatory radiation. He showed that the ultraviolet component of sunlight excited many phosphorescing minerals. Knowing that a vacuum electric discharge was faintly blue led him to assume that the discharge produced UV radiation and would excite fluorescence and phosphorescence, which he later verified. On this basis he built proto-fluorescent lamps.

Edmund Germer and his German coworkers produced working versions of fluorescent lamps in the late 1920s. Germer’s initial objective was to devise a source of UV light that could be operated without elaborate electrical control. By coating the interior of the bulb with UV-excited fluorescent material, Germer understood that such a lamp could also be a light source. He patented these applications in 1927.

By 1934, GEC England had produced a proof-of-concept fluorescent lamp: It produced a green light with a remarkable efficacy of 35 lumens/watt. This prompted a fluorescent lamp development project at General Electric in Cleveland. In April 1938, General Electric announced the availability of the fluorescent lamp. Early lamps were available with phosphors that produced “warm” and “cool” light, known as “white” and “daylight” lamps. New with these lamps was the need for an electrical ballast for starting and operating.

High efficacies and low cost produced a demand for the lamp that grew at an unprecedented rate, confounding early notions that the lamp would see limited demand because of its relative complexity. In its first 10 years, U.S. fluorescent lamp sales grew tremendously, in spite of World War II, and fluorescent lighting began to displace incandescent lighting.

Metal-halide lighting

In 1894 Charles P. Steinmetz began working at General Electric in Schenectady, N.Y. One of his research projects was to develop a lamp that used a mercury discharge augmented to make its light color more acceptable. Steinmetz experimented with the halogen elements and metals. He found that metallic salts in a mercury arc gave a wide range of colors and did not chemically attack the glass arc tube as the isolated elements would. Though patented in 1900, Steinmetz’s lamp could never be made in a practical, commercial form.

In 1959, Gilbert Reiling of the General Electric Research Laboratories picked up where Steinmetz left off. He verified that metallic salts would be stable and radiate in the long wavelengths. By 1960, Reiling had produced experimental lamps using sodium and thallium iodide with high efficacies and good light color properties. Many practical problems were solved and General Electric announced the metal-halide lamp in 1962, using it at the 1964 World’s Fair in New York.

Sodium discharge lighting

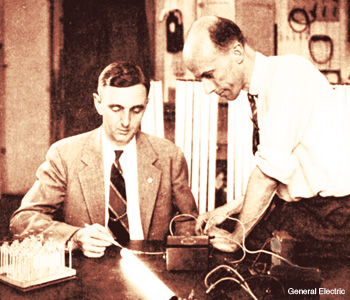

Engineers George Inman and Richard Thayer led the team at General Electric in Cleveland, Ohio, that developed the fluorescent lamp.

Experiments in the 1920s showed that a discharge of low pressure sodium could be used as a high efficacy light source. Unlike mercury, sodium at high temperatures and pressures attacks glass and quartz, and so early sodium lamps were limited to low temperatures and pressures. Although very efficacious—even early lamps provided 80 lumens/watt—their yellow, monochromatic light limited their use to area and roadway lighting.

Robert Coble, a ceramic engineer from General Electric, developed polycrystalline alumina in 1957. It had a diffuse transmittance of nearly 95 percent and a very high melting point; thus, it could be used to contain sodium plasma at high pressures. Using this new material as an arc tube, General Electric began selling high-pressure sodium lamps in 1965.

The technical advances that have taken place since the introduction of the fluorescent lamp have been every bit as great as the step from incandescent to fluorescent technologies. The task of making a fluorescent lamp small and stable proved to be formidable, but ultimately lighting engineers succeeded in bringing us the compact fluorescent lamp, a replacement for the incandescent lamp. Solid state lighting may represent the next great paradigm shift in lighting technology.

Lighting once stood out prominently against the general backdrop of technical development. Indeed, the incandescent lamp remains the symbol for the good idea. But ubiquity and reliability eventually made lighting just another part of everyday life. Yet its importance remains undiminished. It is economical, convenient and plentiful, available in fact at the flip of a switch.

David DiLaura is a retired professor in the department of civil, environmental and architectural engineering at the University of Colorado in Boulder, Colo., U.S.A.