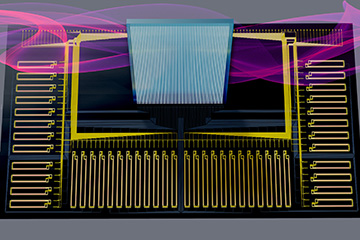

Artist’s view of smart transceiver, used to encode matrix-multiplication weights onto light beams for streaming to internet edge devices in the Netcast system. Researchers at MIT believe the system can enable the running of complex, deep-neural-net machine-learning models directly on low-power edge devices like smartphones and smart speakers rather than on power-hungry cloud servers. [Image: A. Sludds / Edited by MIT News]

From instant multilingual translations to automated voice recognition and much more, cloud-based deep neural networks (DNNs) have enabled a range of now familiar functionalities that, only a few years ago, were the stuff of science fiction. Engineers would love to export some of that AI magic from cloud-based servers to “the edge”—the actual mobile phone, smart speaker or other client device—to speed up response times and enable new applications.

But DNNs are, computationally speaking, a very heavy lift. They involve repeated matrix multiplications and the fetching and storing of billions of weight parameters for those matrices. As a result, the computationally intensive steps of neural-network computing still take place far from the user’s device, in power-hungry data centers.

A research team led by scientists at the Massachusetts Institute of Technology (MIT), USA, has now proposed a different architecture that the group says could make internet edge computing a lot more feasible (Science, DOI: 10.1126/science.abq8271). The architecture—which the group calls “Netcast”—uses photonics at critical junctures to speed up both the flow of matrix weights from the server, and computationally intense matrix multiplications on the user device. The approach, the researchers believe, “removes a fundamental bottleneck in edge computing.”

Laborious process

A typical multilayer DNN, after it’s been “trained” with source data, starts with an input vector of user data, such as a voice query for an “intelligent agent” like Alexa or Siri. The DNN then passes that vector through a series of layered, massive matrix multiplications on a cloud server, using matrix weights developed during the DNN’s training. When the system arrives at the best result, as determined by a so-called nonlinear activation function, it pipes the output back to the client device.
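
As a rough illustration of that layered process, the sketch below runs a toy input vector through two small weight matrices. The matrix sizes, the weight values and the choice of ReLU as the nonlinear activation are all assumptions made for the example, not details from the paper.

```python
def matvec(weights, x):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(v):
    """A common nonlinear activation (assumed here): clip negatives to zero."""
    return [max(0.0, a) for a in v]


def forward(layers, x):
    """Pass x through each layer: matrix multiply, then nonlinearity.

    The final layer's raw scores are returned without an activation.
    """
    for weights in layers[:-1]:
        x = relu(matvec(weights, x))
    return matvec(layers[-1], x)


# Toy "trained" weights (hypothetical values): a 3x2 layer, then a 2x3 layer.
layers = [
    [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]],
    [[1.0, 0.0, 1.0], [0.0, 1.0, -1.0]],
]
x = [2.0, 1.0]  # stand-in for the user's input vector

scores = forward(layers, x)
predicted = max(range(len(scores)), key=scores.__getitem__)
print(scores, predicted)
```

A production model does the same thing with matrices holding millions or billions of weights, which is why fetching those weights from memory dominates the cost.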

Straightforward as that description sounds, however, there’s a lot more going on under the proverbial hood. The laborious matrix calculations required to execute a neural-net model of any complexity might number in the billions. And matrices themselves might contain billions of weight parameters, which need to be stored and then repeatedly fetched from memory to CPUs or GPUs where the actual computation takes place. The result, in the voice-command example, can be a latency of around 200 milliseconds from user command to result—seemingly a very small number, but a noticeable lag to the user.

Alexa, autonomous cars, and drones

That lag, the authors of the new paper point out, has implications considerably farther reaching than a 0.2-second delay in your morning weather report from Alexa. For example, even that brief interval is long enough to rule out services for the real-time decisions needed in autonomous vehicles. It also makes it tougher to use machine-learning applications in remote deployments such as far-flung drones or satellites in deep space.

A better system, at least in principle, would take the input mic, camera or sensor data from the edge device and run those data through the DNN on the device itself. That would drastically reduce the latencies that currently result from sending the input data to a distant cloud server, running the calculation and returning the result. It would also, according to the authors of the new paper, enhance security, since the communication channel between the edge device and the cloud server is ripe for abuse and snooping by hackers.

The problem is that edge devices, with chip-scale sensors, usually measure their power budgets in milliwatts—orders of magnitude below what’s needed to execute neural-net computing electronically. Trying to move DNN computing off of cloud servers and closer to the internet’s edge would thus require boosting the edge device’s size, weight and power (SWaP) requirements beyond what the current market would plausibly accept.

Lightwave advantages

The team behind the new work—led by Optica Fellow Dirk Englund at MIT, and including scientists at MIT, the MIT Lincoln Laboratory and Nokia Corp.—now proposes a system that would use photonics to get around that conundrum, and allow sophisticated neural-net computing on compact, power-efficient edge devices. Fundamentally, the group’s Netcast system is about bringing light to bear where it can pack the biggest efficiency gains: in the transfer of billions of matrix weights to the edge device, and in the execution of the DNN’s matrix multiplications themselves.

Dirk Englund. [Image: MIT EECS]

In the Netcast architecture, the DNN model’s matrix weights remain stored on a central server. A so-called smart transceiver takes those weights and converts them from the electronic to the optical domain. The transceiver leverages wavelength-division multiplexing (WDM) to encode the weightings on different wavelength components for maximum throughput and efficiency. The weightings, traveling on light beams, are then repeatedly broadcast or streamed fiber optically from the server to edge devices.

When an edge device has a piece of sensor data to interpret—such as recognizing an image from an onboard camera—it slurps in the relevant DNN model’s weights being streamed optically from the server. And here, the second photonic component of the system comes into play: a high-speed Mach-Zehnder modulator (MZM) that has been specifically tuned to perform the billions of required matrix multiplications per second.

Performing these computationally intense calculations in the optical rather than the electronic domain slashes their energy requirements, potentially enabling them to be deployed on milliwatt-scale edge devices. And streaming the weight data eliminates the otherwise deal-breaking requirement to store and retrieve those data on those same power- and memory-constrained devices.
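
One way to picture the streaming idea is the software sketch below. It assumes, purely for illustration, that each wavelength channel carries one row of the weight matrix as a sequence of time slots, that the client's modulator scales every channel by the current input value, and that a per-channel receiver sums the products over time. The function names are hypothetical, and the optics are modeled as plain arithmetic.

```python
def stream_weights(weights):
    """Server side: emit one time slot at a time.

    Each slot holds one weight per wavelength channel, that is,
    one column of the weight matrix.
    """
    n_inputs = len(weights[0])
    for t in range(n_inputs):
        yield [row[t] for row in weights]


def client_matvec(weight_stream, x):
    """Client side: multiply each incoming slot by x[t] and accumulate.

    Only one running sum per channel is kept; the full matrix is
    never stored on the client.
    """
    accumulators = []
    for t, slot in enumerate(weight_stream):
        if not accumulators:
            accumulators = [0.0] * len(slot)
        for k, w in enumerate(slot):
            accumulators[k] += w * x[t]  # modulate, then integrate
    return accumulators


W = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]  # toy weights held by the server
x = [1.0, 0.0, -1.0]                    # toy sensor data on the client

y = client_matvec(stream_weights(W), x)
print(y)
```

The point of the sketch is the memory footprint: the client holds its own input and a handful of accumulators, while the weights pass through once and are discarded.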

Testing the architecture

To test out the Netcast architecture, the team built a smart transceiver, cast at a commercial CMOS foundry and consisting of 48 silicon-photonic MZMs. Each MZM is capable of modulating light at a rate of 50 Gbps—which amounts to a total bandwidth of 2.4 Tbps for the transceiver. “Our pipe is massive,” team leader Englund said in a press release accompanying the research. “It corresponds to sending a full feature-length movie over the internet every millisecond or so.” The client device used in the test included a 20-GHz broadband lithium niobate MZM to handle the matrix multiplication, along with a wavelength demultiplexer and an array of time-integrating receivers to sum the output signal.
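
The aggregate bandwidth follows directly from the modulator count and the per-modulator rate quoted above. The short calculation below also converts that rate into bytes delivered per millisecond; the function name is a hypothetical helper, and no particular movie file size is assumed.

```python
def aggregate_bits_per_second(n_modulators, bits_per_second_each):
    """Total throughput if every modulator runs at its full rate."""
    return n_modulators * bits_per_second_each


N_MZM = 48                       # modulators on the smart transceiver
RATE_PER_MZM = 50_000_000_000    # 50 Gbps each

total_bps = aggregate_bits_per_second(N_MZM, RATE_PER_MZM)
bytes_per_millisecond = total_bps // 1000 // 8

print(total_bps / 1e12, "Tbps")
print(bytes_per_millisecond / 1e6, "MB per millisecond")
```

At 2.4 Tbps the link moves about 300 megabytes each millisecond, which gives a sense of scale for Englund's comparison.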

The team used the setup to test the Netcast architecture across an 86-km fiber link between Englund’s lab and the MIT Lincoln Lab. In a simple test of image recognition (specifically, interpreting handwritten digits), the researchers were able to achieve accuracies on the order of 98.7%.

As important, because of the use of a multiplexed optical signal and the massive parallelism enabled by the WDM, the system performs the image recognition at an energy cost as low as 10 femtojoules per matrix multiplication—three orders of magnitude lower than in CMOS electronics. And the use of more sensitive detector arrays can hammer down the energy cost even further, to tens of attojoules per multiplication, according to the study.
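
To put those figures in context, the back-of-the-envelope sketch below asks how many multiplications per second a 1-milliwatt budget could pay for at each energy cost. It is an illustration under stated assumptions: the 1 mW budget is a round number, the CMOS figure is inferred from the "three orders of magnitude" comparison, 10 attojoules is taken as the low end of "tens of attojoules," and each quoted energy is treated as the cost of a single multiply operation.

```python
FEMTO = 1e-15
ATTO = 1e-18


def ops_per_second(power_watts, energy_per_op_joules):
    """Operations per second affordable at a given power budget."""
    return power_watts / energy_per_op_joules


BUDGET_W = 1e-3                   # assumed 1 mW edge-device budget

netcast_j = 10 * FEMTO            # reported: as low as 10 fJ
cmos_j = netcast_j * 1000         # inferred: three orders of magnitude higher
sensitive_j = 10 * ATTO           # assumed low end of "tens of attojoules"

for label, energy in [("CMOS (inferred)", cmos_j),
                      ("Netcast (reported)", netcast_j),
                      ("Netcast, sensitive detectors (assumed)", sensitive_j)]:
    print(f"{label}: {ops_per_second(BUDGET_W, energy):.1e} ops/s")
```

Under these assumptions, the same milliwatt that buys roughly a hundred million multiplications per second in electronics buys roughly a hundred billion at 10 femtojoules each.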

Scaling Netcast up

Such energy savings, the team argues, should make the Netcast architecture scalable to the milliwatt-level power consumption required for edge devices. That could allow DNNs for image recognition, interpreting sensor data or other functions to run directly on fast, photonically enabled edge devices, rather than having to be piped to remote cloud servers for processing. And, while the client device for the team’s experiments—built using off-the-shelf telecom equipment—is shoebox-sized, coauthor Ryan Hamerly, an MIT research scientist, says that miniaturizing those components should be easily accomplished.

“We chose this approach because it was the quickest way to do the experiment, but all of those components (modulators, detectors, WDM) can be integrated on-chip,” Hamerly told OPN in an email. He added that the team has been working with silicon-photonics foundries to construct just such an integrated version of the Netcast client. “The resulting chip,” he said, “is a few millimeters in size and should run equally fast. Thus, there is ample room to scale up the Netcast technology.”