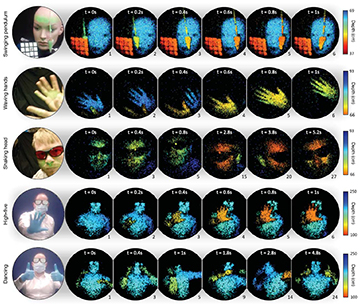

A multinational research team has demonstrated a method for capturing, at near-video rates, 3D images transmitted through an ultrathin multimode optical fiber. This picture shows mobile-phone images of the scene being captured (left column) and snapshots of the fiber image at different time steps. The color scale gives the depth of each image pixel within the scene. [Image: D. Stellinga]

By putting together techniques drawn from adaptive optics and lidar, researchers from the United Kingdom, Germany and the Czech Republic have prototyped an approach for seeing 3D images of a remote scene through a strand of ultrathin, multimode optical fiber (Science, doi: 10.1126/science.abl3771).

As demonstrated, the setup can image objects at distances from tens of millimeters to several meters from the fiber tip, at near-video frame rates of around 5 Hz. The researchers hope that someday the system might find use in settings ranging from the medical clinic to industrial inspection and environmental monitoring.

Toward a thinner endoscope

A typical endoscope consists of a bundle of single-mode optical fibers, each of which images a single pixel of the remote scene. According to Optica Fellow Miles Padgett of the University of Glasgow, UK, who led the new study, that design can produce endoscopes as large as 10 mm in diameter, or “the thickness of a finger,” as he puts it.

Endoscopy using multimode fiber (MMF) has long held interest as an alternative. A single, 0.1-mm-thick strand of such fiber can, as the name implies, support thousands of spatial optical modes. That means it has the potential to transmit high-density spatial information, in a package orders of magnitude thinner than conventional fiber-bundle endoscopes. But there’s a significant hitch—when an image of any complexity is passed through such fibers, crosstalk between the multiple modes scrambles the image, producing an unrecognizable speckle pattern at the fiber’s output end.
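The claim that a single thin strand carries thousands of modes can be sanity-checked with the standard large-V estimate for a step-index fiber, M ≈ V²/2 with V = 2πa·NA/λ. The article gives only the 0.1-mm overall thickness, so the 25-µm core radius and 0.22 numerical aperture below are hypothetical, typical values; the 532-nm wavelength is the one used in the experiment.

```python
import math

def v_number(core_radius_m, numerical_aperture, wavelength_m):
    """Normalized frequency V of a step-index fiber."""
    return 2 * math.pi * core_radius_m * numerical_aperture / wavelength_m

def approx_mode_count(core_radius_m, numerical_aperture, wavelength_m):
    """Large-V approximation M ~ V^2 / 2 (counts both polarizations)."""
    return v_number(core_radius_m, numerical_aperture, wavelength_m) ** 2 / 2

# Hypothetical fiber: 50-um core diameter, NA 0.22; 532-nm light as in the experiment.
m = approx_mode_count(25e-6, 0.22, 532e-9)
print(round(m))  # about 2,100 modes
```

With these assumed parameters the estimate lands at roughly two thousand guided modes, consistent with the "thousands" quoted above; a larger core or higher NA raises the count quadratically.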

Over the past decade, however, researchers have found a way around that problem. If the fiber stays in a fixed position, the scrambling also stays fixed, and it can be described by a mathematical operator, the so-called transmission matrix (TM). Once the TM is known, it can be used to shape the input light so that the fiber’s scrambling is undone. And earlier this year, a research group led by David Phillips at the University of Exeter, UK, demonstrated a method for determining the TM very rapidly using compressive sensing.
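How a known TM lets input shaping undo the scrambling can be illustrated with a small simulation. The sketch below stands in for a real fiber with a random complex matrix (a common statistical model of a strongly mixing multimode fiber, used here as an assumption). A flat input wavefront produces speckle, while a phase-only input built by conjugating the phases of one TM row makes every contribution arrive in phase at that output pixel. This is textbook phase conjugation in simulation, not the authors' code; all function names are hypothetical.

```python
import cmath
import math
import random

def random_tm(n_out, n_in, seed=0):
    """Hypothetical stand-in for a measured TM: i.i.d. complex Gaussian entries."""
    rng = random.Random(seed)
    scale = math.sqrt(2 * n_in)
    return [[complex(rng.gauss(0, 1), rng.gauss(0, 1)) / scale for _ in range(n_in)]
            for _ in range(n_out)]

def propagate(tm, field_in):
    """Output field = TM applied to the input field (matrix-vector product)."""
    return [sum(t * e for t, e in zip(row, field_in)) for row in tm]

def phase_conjugate_input(tm, target):
    """Unit-amplitude input whose phases cancel those of TM row `target`."""
    return [cmath.exp(-1j * cmath.phase(t)) for t in tm[target]]

def focus_contrast(tm, field_in, target):
    """Intensity at `target` divided by the mean output intensity."""
    out = [abs(e) ** 2 for e in propagate(tm, field_in)]
    return out[target] / (sum(out) / len(out))

tm = random_tm(n_out=64, n_in=64)
flat = [1 + 0j] * 64                      # unshaped input: all pixels in phase
shaped = phase_conjugate_input(tm, target=10)
print(focus_contrast(tm, flat, 10))       # unshaped: speckle, no focus
print(focus_contrast(tm, shaped, 10))     # shaped: a bright spot at pixel 10
```

Because each term t·e^(−i·arg t) equals |t|, the shaped input adds all 64 contributions constructively at the target, while every other output pixel still sees a random sum.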

Scanning the spot

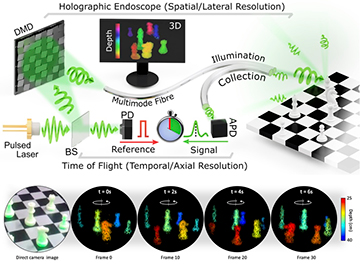

Setup of the MMF imaging system. BS, beam splitter; DMD, digital micromirror device; PD, photodiode; APD, avalanche photodiode. [Image: D. Stellinga]

In the new study in Science, Phillips has joined forces with Padgett and other researchers to extend MMF imaging to 3D video.

In the team’s setup, once the TM of the fiber has been measured, it is used to program an electronically controlled digital micromirror device (DMD) that shapes an input 532-nm, 700-ps laser pulse so that the fiber’s scrambling effect is reversed. As a result, the pulse launched into the fiber’s input end emerges from the other end as a single, well-defined spot of light that illuminates the object to be imaged. By rapidly switching the DMD pattern, the team can raster-scan this spot across the field of view at a rate of 22.7 kHz, illuminating the entire scene point by point.
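A DMD mirror is either on or off, so it cannot display an arbitrary phase pattern directly. The article does not say how the team encodes its patterns; the sketch below swaps in one simple, commonly used binary-amplitude trick as an illustration: switch on only the mirrors whose contribution to the target output pixel has a positive real part, so those contributions add roughly in phase there. Raster scanning then means computing one such mask per output pixel. The random matrix again stands in for a measured TM, and all names are hypothetical.

```python
import math
import random

def random_tm(n_out, n_in, seed=1):
    """Hypothetical stand-in for a measured TM: i.i.d. complex Gaussian entries."""
    rng = random.Random(seed)
    scale = math.sqrt(2 * n_in)
    return [[complex(rng.gauss(0, 1), rng.gauss(0, 1)) / scale for _ in range(n_in)]
            for _ in range(n_out)]

def binary_dmd_mask(tm, target):
    """On/off mirror mask: 'on' where the TM entry for `target` has positive real
    part, i.e. its phase lies within +/- pi/2 of zero."""
    return [1 if t.real > 0 else 0 for t in tm[target]]

def output_intensity(tm, mask):
    """Output intensities when 'on' mirrors send unit amplitude into the fiber."""
    return [abs(sum(t for t, m in zip(row, mask) if m)) ** 2 for row in tm]

def raster_scan(tm):
    """Yield (target, brightest output pixel) for one mask per output pixel."""
    for target in range(len(tm)):
        out = output_intensity(tm, binary_dmd_mask(tm, target))
        yield target, max(range(len(out)), key=out.__getitem__)

tm = random_tm(n_out=64, n_in=256)
hits = sum(1 for target, peak in raster_scan(tm) if target == peak)
print(f"{hits}/64 masks put the brightest spot on their target")
```

The binary mask focuses less efficiently than full phase control, which is why this sketch uses more input pixels (256) than output positions (64) to keep the spot well above the speckle background.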

The backscattered light from the object is then routed back through a secondary fiber and collected by an avalanche photodiode. The photodiode records the backscattered intensity, which gives the brightness of each pixel and is used to build an image of the object. In addition, comparing the arrival time of each returning pulse with that of a reference pulse from the same laser yields the pulse’s round-trip time of flight, and hence the distance to the point in the scene that scattered it.
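Converting a measured delay into distance is a one-line formula: the light covers the path between fiber tip and object twice, so d = cΔt/2. The sketch assumes propagation in air (refractive index taken as 1) and folds the fixed delay through fibers, cables and electronics into a hypothetical calibration constant `t_system_s`; the article does not describe how that offset is handled.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s (air approximated as n = 1)

def depth_from_delay(t_return_s, t_reference_s, t_system_s=0.0):
    """Distance from the fiber tip to the scattering point.

    t_return_s:    arrival time of the backscattered pulse at the APD
    t_reference_s: arrival time of the reference pulse
    t_system_s:    hypothetical calibrated constant delay (fibers, electronics)
                   present even for an object touching the fiber tip
    """
    round_trip_s = t_return_s - t_reference_s - t_system_s
    return C * round_trip_s / 2.0

# A return 10 ns after the calibrated zero corresponds to about 1.5 m.
print(depth_from_delay(15e-9, 2e-9, t_system_s=3e-9))
```

Each nanosecond of extra delay corresponds to roughly 15 cm of range, which is why timing precision sets the achievable depth detail.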

From 2D to 3D

The extra time-of-flight data means that the team is able to pick out where the pulse is being returned from in three-dimensional space. That, in turn, allows the system to build not just a 2D image, but full, lidar-like 3D point clouds. The researchers demonstrated the approach with 40×40-pixel images of a mannequin, a chessboard and a moving human, at multiple scene depths and at frame rates of nearly 5 Hz.
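Once each raster position has an intensity and a range, turning a frame into a point cloud is a change of coordinates: each spot defines a viewing direction, and the range says how far along that direction the scatterer sits. The mapping from raster index to direction below (spots evenly spaced in angle over a hypothetical ±0.2-rad field of view) is an assumption; a real system would calibrate it. The block also computes the frame rate that the 22.7-kHz scan alone would allow for a 40×40 frame, assuming one DMD pattern per pixel.

```python
import math

def pixel_direction(i, j, n, half_angle_rad):
    """Unit viewing direction for raster position (i, j) of an n x n scan.
    Hypothetical mapping: spots evenly spaced in angle over +/- half_angle_rad."""
    ax = -half_angle_rad + 2 * half_angle_rad * j / (n - 1)
    ay = -half_angle_rad + 2 * half_angle_rad * i / (n - 1)
    v = (math.tan(ax), math.tan(ay), 1.0)
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)

def point_cloud(range_m, intensity, half_angle_rad, min_intensity=0.0):
    """Convert per-pixel range and intensity maps into (x, y, z, I) points,
    skipping pixels whose intensity is at or below min_intensity."""
    n = len(range_m)
    points = []
    for i in range(n):
        for j in range(n):
            if intensity[i][j] <= min_intensity:
                continue
            dx, dy, dz = pixel_direction(i, j, n, half_angle_rad)
            r = range_m[i][j]
            points.append((r * dx, r * dy, r * dz, intensity[i][j]))
    return points

def scan_limited_frame_rate(n_pixels, pattern_rate_hz, patterns_per_pixel=1):
    """Upper bound on frame rate if the DMD pattern rate were the only limit."""
    return pattern_rate_hz / (n_pixels * patterns_per_pixel)

n = 40
ranges = [[1.0] * n for _ in range(n)]       # illustrative flat target 1 m away
brightness = [[1.0] * n for _ in range(n)]
cloud = point_cloud(ranges, brightness, half_angle_rad=0.2)
print(len(cloud))                             # 1600
print(scan_limited_frame_rate(n * n, 22_700))  # 14.1875
```

Under the one-pattern-per-pixel assumption, the raster rate alone would permit about 14 frames per second, well above the nearly 5 Hz reported, so other parts of the acquisition must account for the remaining time; the article does not break this down.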

A video of the system in action. [Credit: D. Stellinga]

The team sees a number of ways to improve the image quality beyond what this first prototype can deliver. These include the use of shorter laser pulses to improve depth resolution, and more sophisticated computational-imaging algorithms to sharpen up reconstructions and further remove background speckle.
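Why shorter pulses help can be seen from a back-of-envelope estimate: a pulse of duration τ spans a round-trip depth interval of about cτ/2, so returns from surfaces closer together than this overlap in time. For the 700-ps pulses used here:

$$
\Delta z \approx \frac{c\,\tau}{2} = \frac{(3.0\times10^{8}\ \mathrm{m\,s^{-1}})\,(700\times10^{-12}\ \mathrm{s})}{2} \approx 0.105\ \mathrm{m}
$$

This is a crude pulse-length scale rather than the prototype's measured depth precision, since fitting the timing of a single return can locate it well within one pulse length; but it shows that halving τ halves the interval over which nearby surfaces blur together.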

Toward industrial and biomedical applications

In a press release accompanying the work, Phillips said that the research opens “a window into a variety of new environments that were previously inaccessible.” He envisions that the system might someday be used, for example, to image hard-to-reach nooks and crannies inside systems such as jet engines and nuclear reactors. In the longer term, the team hopes that the research might also lead to biomedical endoscopes that are less bulky and invasive than the current standard.

There’s one significant hurdle to clear, however, before these more dynamic use cases can become reality. Right now—since any change in the fiber’s position requires a recalculation of the TM used to shape the input light—the system works only if the fiber isn’t moved. The team is now investigating ways to speed up the TM measurement and system calibration, to handle situations where the fiber moves, bends and twists as it explores and images an environment.
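Why calibration speed matters becomes clear from the most direct way to measure a TM: light one input pixel at a time and record the complex output field, which yields one column of the matrix per measurement. The sketch simulates this with a hypothetical `make_fiber` helper; on real hardware, recording a complex field requires an interferometric (holographic) measurement, which is simply assumed here. This naive scheme is not the compressive-sensing method mentioned earlier, which is designed to need fewer measurements; it only illustrates that the cost grows linearly with the number of input pixels and must be paid again whenever the fiber moves.

```python
import random

def make_fiber(n_out, n_in, seed=2):
    """Hypothetical simulated fiber: forward() applies a hidden TM to an input
    field and counts how many input patterns have been sent."""
    rng = random.Random(seed)
    hidden = [[complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n_in)]
              for _ in range(n_out)]
    calls = {"n": 0}

    def forward(field_in):
        calls["n"] += 1
        return [sum(t * e for t, e in zip(row, field_in)) for row in hidden]

    return forward, hidden, calls

def measure_tm_naive(forward, n_in):
    """Light one input pixel at a time; each output field is one TM column."""
    columns = []
    for k in range(n_in):
        probe = [0j] * n_in
        probe[k] = 1 + 0j
        columns.append(forward(probe))
    return [list(row) for row in zip(*columns)]  # transpose columns into rows

forward, hidden, calls = make_fiber(n_out=16, n_in=32)
tm = measure_tm_naive(forward, 32)
print(tm == hidden, calls["n"])  # recovered exactly, using one probe per input pixel
```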

In addition to researchers at Glasgow and Exeter, the team included scientists at the Fraunhofer Center for Applied Photonics, UK; the Leibniz Institute of Photonic Technology, Germany; and the Institute of Scientific Instruments of the Czech Academy of Sciences (CAS), Czech Republic.