Celestial AI is working on a “Photonic Fabric” that the company says can efficiently deliver optically encoded information for data-hungry AI processors to anywhere on the computer die. [Image: celestial.ai]

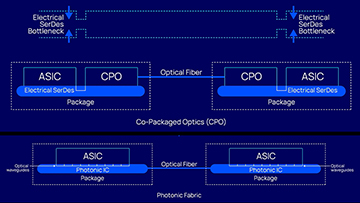

The burgeoning interest in artificial intelligence has thrown a new spotlight on optical data interconnects, which can feed data-hungry AI workloads at a fraction of the latency and energy cost of copper wire. In these schemes, the name of the game is to get the conversion between the optical and electrical domains as close to the processor as possible. Numerous companies are thus working on co-packaged optics (CPOs) that can bring data-carrying optical waveguides right to the edge of the electronic application-specific integrated circuits (ASICs) or graphics processing units (GPUs) that do AI’s heavy lifting.

One startup, though, wants to take optical interconnects even deeper. The company, Celestial AI, is developing what it calls a “Photonic Fabric,” which it says is capable of delivering optically encoded data not just to the edge of the processor, as in a CPO scheme, but to any location on the computer die. Celestial AI claims that this allows the Photonic Fabric to deliver 25 times greater bandwidth at 10 times lower latency than CPO interconnect alternatives coming into the market.

At the end of June, Celestial AI completed a US$100 million Series B funding round that it’s hoping to use in the drive from development to commercialization. To learn more about the company and the technology, OPN recently talked with its cofounder and CEO, Dave Lazovsky.

Silicon photonics meets AI

Dave Lazovsky. [Image: Celestial AI]

Lazovsky—who has nearly 30 years’ experience in the semiconductor and photonics industries—says that he and his cofounder, Preet Virk, started up Celestial AI three years ago in part because of the substantial advances seen by silicon photonics over the past decade. Those advances, he observes, have thus far been driven largely by the requirements of data communications.

“We saw silicon photonics reaching a point of maturity where the timing of a company like this really begins to make a lot of sense,” he notes. “The cost structure is now at a point where the optics can be much less expensive than it used to be.”

The advances in silicon photonics are particularly relevant now, says Lazovsky, in light of the evolution of AI and machine learning toward large language models, such as the celebrated ChatGPT, and so-called recommendation engines, which Lazovsky calls “the most complex AI workload in production today.” Such workloads consume prodigious amounts of data that, in principle, can be moved from memory to processor in optical pipes, at a considerable savings in time, cost and power relative to copper-wire transmission.

Scaling the memory wall

According to Lazovsky, when he and Virk founded the company, they were eyeing not just interconnects but a photonic AI accelerator, to aid in the actual computation. But as AI evolved toward large language models and other data-intensive workloads, the game changed.

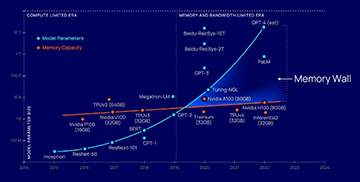

Lazovsky says that, owing to a “memory wall” constraining on-chip memory for the processors powering AI routines, the computing industry is moving from a “compute-limited era” to a “bandwidth-limited era.” [Image: celestial.ai]

There has been, he says, a “transition from a compute-limited routine,” in which the processor performance per watt of power is the key metric, to a “bandwidth-limited regime, where interconnectivity is the gate—it’s memory bandwidth and it’s chip-to-chip I/O [input/output].” Insiders refer to this as the “memory wall,” in which the memory requirements for handling data in complex AI workloads have begun to vastly outstrip the amount of on-chip memory available to the processor.
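One common way to picture the difference between the two regimes is the roofline model, in which attainable throughput is capped either by the processor's peak compute rate or by memory bandwidth multiplied by the work done per byte moved. The Python sketch below is only an illustration of that general idea, not anything drawn from Celestial AI; the function names and the hardware figures are hypothetical.

```python
def attainable_flops(peak_flops, mem_bandwidth, arithmetic_intensity):
    """Roofline estimate of attainable throughput in FLOP/s.

    peak_flops:            peak compute rate (FLOP/s)
    mem_bandwidth:         memory bandwidth (bytes/s)
    arithmetic_intensity:  work per byte moved (FLOP/byte)
    """
    return min(peak_flops, mem_bandwidth * arithmetic_intensity)


def regime(peak_flops, mem_bandwidth, arithmetic_intensity):
    """Classify a workload as compute-limited or bandwidth-limited."""
    # The "ridge point" is the intensity at which the two limits meet.
    ridge = peak_flops / mem_bandwidth
    if arithmetic_intensity >= ridge:
        return "compute-limited"
    return "bandwidth-limited"


# Hypothetical accelerator: 100 TFLOP/s peak compute, 1 TB/s memory bandwidth.
PEAK = 100e12
BANDWIDTH = 1e12

# A data-hungry workload doing 10 FLOP per byte is held back by bandwidth.
print(regime(PEAK, BANDWIDTH, 10), attainable_flops(PEAK, BANDWIDTH, 10))

# A workload doing 500 FLOP per byte is held back by compute instead.
print(regime(PEAK, BANDWIDTH, 500), attainable_flops(PEAK, BANDWIDTH, 500))
```

In this toy picture, raising memory bandwidth lifts the ceiling for the first workload but does nothing for the second, which is why interconnect performance becomes the deciding factor once workloads fall on the bandwidth-limited side.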

In such a situation, Lazovsky says, the ability to move data efficiently to where it’s needed becomes the key performance parameter. And the inability of all-electronic networks to do so often leads to large amounts of “stranded memory” that AI workloads can ill afford.

The Photonic Fabric

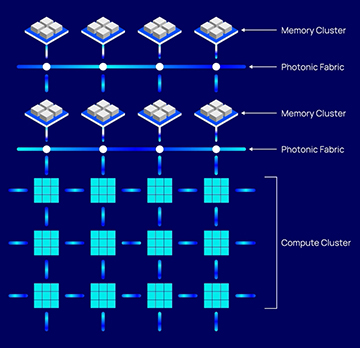

Celestial AI’s answer to this dilemma is a product it calls the Photonic Fabric. In essence, it’s an interconnect scheme that Lazovsky says is designed to bring optically encoded data directly to the precise point of computation, keeping the data in the transport-efficient optical domain for as long as possible.

The fabric Celestial AI is developing, Lazovsky argues, can overcome an inherent limitation in conventional CPO approaches to optical-electronic interconnects. Typically, these systems embody a “beachfront” architecture: Data are delivered in optical waveguides to the edge of the ASIC or GPU, and then are converted to the electrical domain via ring resonators at the end of the waveguide so the data can be processed electronically.

The beachfront limitation is forced on such CPO schemes, Lazovsky explains, because the performance and bandwidth of a ring resonator can vary significantly with temperature. “So if you put a high-temperature ASIC directly on top of it, it becomes untenable to manage.”

Beyond the beachfront

Celestial AI’s Photonic Fabric, in contrast, uses a silicon photonic modulation technology that, in Lazovsky’s words, is “about 60 times more thermally stable than a ring.” This, he says, will enable the Photonic Fabric to deliver bandwidth beyond the beachfront, and directly at the “point of compute” or data consumption in the ASIC. (He notes that the thermally stable modulator is already “in volume production for other applications”; Celestial AI is just the first company, he says, to use it in this way.)

According to Celestial AI, while CPO arrangements relying on ring resonators for optical-electrical conversion are limited to delivering optically encoded data to the edge of the electronic processor (top), the Photonic Fabric, because of its more thermally stable modulation approach, can bring data directly to the point of compute within the electronic chip. [Image: celestial.ai]

The Photonic Fabric is actually an architecture that includes not just modulators but other photonic components and sophisticated, 4-to-5-nm control electronics. The company maintains that the direct chip-to-chip packaging enabled by the fabric can deliver 25 times greater bandwidth than “any optical interconnect alternative, such as co-packaged optics”—at 10 times lower latency and power consumption.

“The beauty of our technology,” says Lazovsky, “is not only is our bandwidth density higher, but more importantly, we can deliver bandwidth anywhere we want across the system.”

Rescuing stranded memory

At the end of June 2023, Celestial AI completed a US$100 million Series B funding round, backed by IAG Capital Partners, Koch Disruptive Technologies, Temasek and an array of other investors. Lazovsky says Celestial AI, which now has around 100 employees, will use the Series B money to help it transition “from a development phase to a commercialization phase of our technology,” using a prototype product, code-named Zeus, to “demonstrate to customers the full functionality of the Photonic Fabric.”

The end goal of all of this, according to Lazovsky, is to build “high-capacity, high-bandwidth, pooled memory systems” interconnected by optics, to support increasingly complex and memory-intensive AI workloads and rescue so-called stranded memory. To Celestial AI, that looks like a significant business opportunity.

“The market size over the next five years, depending on which analyst you listen to, will be US$225 billion to US$265 billion of data center infrastructure—the compute and the optical interconnectivity that supports it,” says Lazovsky. Around 70% of that market, he adds, is “really driven by the four big hyperscalers”: Amazon, Google, Microsoft and Meta Platforms. “It’s those four companies, and the ecosystem around them, that are the focus for us,” Lazovsky says. “We’re working very closely with that ecosystem and with those companies.”