A wildfire frontline with emergency services nearby, Okanagan Valley, British Columbia, Canada. [N. Fitzhardinge / Getty Images]

The 2023 spring wildfire season in Canada was the worst on record, with an area larger than the Netherlands—more than 12 million acres—burned by June. That figure exceeds the amount of land burned in the entire 2016, 2019, 2020 and 2022 seasons combined. The fires displaced tens of thousands of people, destroyed hundreds of homes and shrouded the country in toxic smoke that blew into the eastern United States. A thick orange haze enveloped New York City, whose air quality at one point ranked among the worst of any city in the world.

The incident served as a stark reminder that wildfires are becoming more frequent and intense due to climate change—a problem not limited to North America (see “A World on Fire,” OPN September 2023). For example, while bushfires have shaped the landscape in Australia for millennia, weather changes over the last century have significantly increased fire risk, according to the Intergovernmental Panel on Climate Change and the Australian Climate Council. The 2019–2020 “Black Summer” fire season was one of the worst in the country’s recorded history. And in Europe, many observers tied 2022’s record-breaking wildfires across the continent to extreme, sometimes unprecedented summer temperatures caused by global warming.

A 2022 report by the United Nations (UN) Environment Programme titled “Spreading Like Wildfire: The Rising Threat of Extraordinary Landscape Fires” projects a global increase in extreme fires of up to 14% by 2030, 30% by 2050 and 50% by the end of the century. The dangerous combination of increased drought, high air temperatures, lightning and strong winds produces hotter, drier and longer fire seasons.

While completely eliminating risk isn’t feasible, wildfire researchers aim to better understand extreme fire behavior in a changing climate to reduce hazards where possible—and optics plays a critical role in their work. In this feature, we offer a few snapshots of efforts by US scientists, in areas such as fuel mapping, smoke plume dynamics and early detection, to leverage optical technologies to help limit the intensity and impact of future wildfires.

The wide-ranging impact of wildfires

Landscape fires—which are low impact, often intentional, and easily controlled—are an important natural phenomenon and land-management tool. Wildfires, on the other hand, are unwanted, unplanned and largely uncontrollable. The UN defines a wildfire as “an unusual or extraordinary free-burning vegetation fire that poses significant risk to social, economic or environmental values.”

From 1900 to 2023, according to data from the University of Louvain Center for Research on the Epidemiology of Disasters, wildfires killed some 4,600 people. That’s low compared with the death tolls from earthquakes (more than 2.3 million) and floods (more than 7 million). But wildfire smoke, a complex mixture of particulate matter and gaseous pollutants, has considerably farther-reaching, longer-term health implications. Fine particulate matter (particles smaller than 2.5 μm in diameter, known as PM2.5) is small enough to penetrate deep into the lungs and even enter the bloodstream. According to a 2021 study of wildfire-related PM2.5 pollution, exposure to wildfire smoke was associated with more than 30,000 deaths per year between 2000 and 2016 across the 43 countries included in the analysis.

Wildfires have destroyed whole towns in their path. They damage power and communication lines, water supplies, roads and railways. Annual worldwide spending on fire management continues to rise, on top of cleanup and rebuilding costs. The US federal government spends nearly US$2 billion fighting wildfires each year, an increase of more than 170% in the last decade.

Changing fires in a warming climate

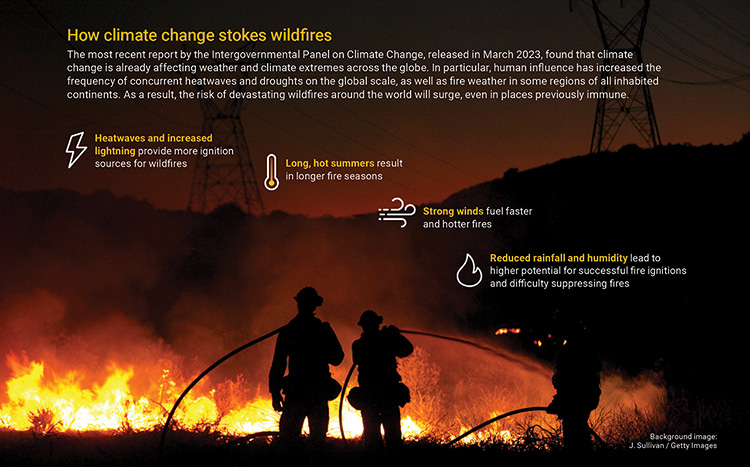

Several factors are needed for a wildfire to occur, including ignition, continuous fuels, drought conditions and “fire weather” (such as strong winds, high temperatures and low humidity). Climate change increases the chances of each happening (see “How climate change stokes wildfires,” below).

“The seven largest fires by acreage in California history all occurred within the last six years,” said Carroll Wills, senior communication advisor at California Professional Firefighters. “On that basis alone, you can quantify the degree to which recent conditions have contributed to the fire problem.”

Experts also warn of the possibility of a reinforcing feedback loop, where increased wildfire activity leads to more greenhouse gas emissions, which further accelerates climate change. In addition, wildfires in ecosystems that store large amounts of irrecoverable terrestrial carbon, such as peatlands and rainforests, will release vast quantities of carbon dioxide into the atmosphere.

Against this sobering backdrop, it’s easy to lose hope. But Susan Prichard, a research scientist at the University of Washington School of Environmental and Forest Sciences, USA, warns against that tendency. “Often times, people in the media talk about climate change and wildfires as kind of a doomsday scenario, and there’s a tendency to just kind of throw your hands up in the air,” Prichard said. “And as ecologists who study proactive fire management strategies, we’ve been really trying to push against that narrative.”

Part of that push involves developing approaches to fire mitigation, which will be crucial in learning how to live with fire amid the reality of climate change. Collecting and analyzing data on fire behavior can improve management of wildfire fuels, help prevent ignition and reduce gaps in fire preparedness and response. And optical techniques have become an important tool in the fight.

Mapping fuels with lidar

The most advanced models of fire behavior use computational fluid dynamics—essentially, modeling fire as fluid flowing through a landscape—and incorporate interactions among fuel, weather, topography and other contributing factors. The most challenging part of fire modeling is characterizing the burning vegetation.

3D Fuels Project team members Eric Rowell and Michelle Bester conducting terrestrial lidar scanning at Lubrecht Experimental Forest, MT, USA. [Courtesy of S. Prichard, University of Washington]

Now, with the help of lidar, researchers can for the first time map fuels in 3D, leading to more accurate fuel characterization and better models. Such improved modeling should help fire managers explore effective treatment strategies, including creating fuel breaks to lessen fire severity, reducing fuel loads with prescribed fires and optimizing firefighting tactics. Better models also can enhance smoke forecasts and improve the assessment of carbon stores and fire–climate interactions.

“If it wasn’t for lidar, we wouldn’t be able to inform these next-generation models of fire behavior and smoke production, which are absolutely mission critical,” said Prichard, who has mapped forests and grasslands with lidar in the western and southeastern United States for the last four years as part of the 3D Fuels Project.

Lidar is a remote-sensing technology that actively emits laser pulses to measure distances to target objects. Based on the time delay of millions of returned pulses, a lidar system can precisely map vegetation as a dense point cloud in 3D.
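
The ranging principle can be sketched in a few lines of Python. This is a simplified illustration, assuming an idealized scanner that reports each return’s round-trip delay together with its azimuth and elevation angles; the function names and sample returns are hypothetical, and real systems also correct for atmospheric refraction, sensor orientation and GPS/IMU position.

```python
import math

# Speed of light in vacuum (m/s); the small correction for the refractive index of air is ignored.
C = 299_792_458.0


def tof_to_range(delay_s: float) -> float:
    """Convert a round-trip pulse delay (s) into a one-way range (m)."""
    # The pulse travels to the target and back, so the range is half the round-trip path.
    return C * delay_s / 2.0


def to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Place a single return in scanner-centered Cartesian coordinates (m)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))


# Hypothetical returns: (round-trip delay in s, azimuth in degrees, elevation in degrees)
returns = [(66.7e-9, 0.0, 0.0), (133.4e-9, 90.0, 30.0)]
cloud = [to_xyz(tof_to_range(delay), az, el) for delay, az, el in returns]
for point in cloud:
    print(tuple(round(v, 2) for v in point))
```

A delay of about 66.7 ns corresponds to a target roughly 10 m away; repeating this conversion for millions of returns is what builds up the point cloud.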

Fuel mapping can use terrestrial, mobile scanning, airborne or space-based lidar. With terrestrial lidar, a scanner mounted on a tripod can capture a landscape through 360° horizontally as well as vertically. The result is a very high-resolution 3D map with accurate measurements of tree-level parameters such as bole diameter at breast height, total height, crown base height and crown width.
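
A common way to measure stem diameter in a terrestrial scan is to cut a thin horizontal slice of stem points at breast height (conventionally 1.3 m) and fit a circle to it. The sketch below illustrates that idea with an algebraic least-squares circle fit on synthetic data; the function names, slice width and synthetic stem are assumptions, real workflows first normalize heights to the ground and segment individual stems, and this is not presented as the 3D Fuels Project’s processing chain.

```python
import numpy as np


def fit_circle(xy: np.ndarray) -> tuple[float, float, float]:
    """Algebraic (Kasa) least-squares circle fit; returns center x, center y and radius."""
    x, y = xy[:, 0], xy[:, 1]
    # (x - a)^2 + (y - b)^2 = r^2 rearranges to x^2 + y^2 = 2ax + 2by + c, linear in a, b, c.
    A = np.column_stack([2 * x, 2 * y, np.ones_like(x)])
    rhs = x**2 + y**2
    (a, b, c), *_ = np.linalg.lstsq(A, rhs, rcond=None)
    radius = np.sqrt(c + a**2 + b**2)  # since c = r^2 - a^2 - b^2
    return float(a), float(b), float(radius)


def estimate_dbh(points: np.ndarray, breast_height: float = 1.3, half_width: float = 0.05) -> float:
    """Estimate stem diameter (m) from points in a thin slice around breast height."""
    z = points[:, 2]
    slice_xy = points[np.abs(z - breast_height) <= half_width, :2]
    if len(slice_xy) < 3:
        raise ValueError("need at least three points in the breast-height slice")
    _, _, radius = fit_circle(slice_xy)
    return 2.0 * radius


# Synthetic stem: 0.40-m diameter centered at (5, 3), seen from one side only (occlusion)
rng = np.random.default_rng(0)
n = 2000
theta = rng.uniform(-np.pi / 2, np.pi / 2, n)  # only the half facing the scanner is visible
heights = rng.uniform(0.0, 3.0, n)
noise = rng.normal(0.0, 0.005, n)  # 5-mm range noise
stem = np.column_stack([5 + (0.20 + noise) * np.cos(theta),
                        3 + (0.20 + noise) * np.sin(theta),
                        heights])
print(f"Estimated DBH: {estimate_dbh(stem):.3f} m")
```

Simulating only the half of the stem that faces the scanner mimics the occlusion problem described below; a fit to a partial arc still recovers the diameter when noise is small, but becomes less stable as the visible arc shrinks.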

“A problem with terrestrial lidar is that it’s subject to occlusion problems because it can’t see through the trees,” said Andrew T. Hudak, research forester at the US Department of Agriculture, Rocky Mountain Research Station. “With mobile scanning lidar, you have it on a backpack, walk around, and software stitches together the point cloud scans as you’re moving. So then you get a real-time depiction of how the trees are and the structure.”

Although it provides limited information at the tree scale or under the canopy, airborne lidar does have the advantage of rapidly describing forest structure over large areas. Parameters gathered with airborne lidar can include tree location within plots, tree height, crown dimensions and volume estimates. Typically, a plane equipped with a scanner flies over the landscape with parallel flight lines that overlap, so that it captures two view perspectives for any given location on the ground.

For example, Hudak works with airborne lidar data acquired with a Leica ALS60 sensor, an off-the-shelf scanning-mirror lidar mapping system that operates a 1064-nm laser; the data were collected with a 20° field of view and 50% side overlap from a flying height of 1200 m. The system operates in “multiple pulses in air” mode, which allows it to fire a second laser pulse before receiving the previous pulse’s reflection, effectively doubling the pulse rate at any given altitude.
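
The survey geometry in this example can be checked with a little arithmetic. The sketch below assumes flat terrain, treats the 20° figure as the full scan angle and uses the nadir range of 1200 m; real mission planning also accounts for terrain relief, the longer slant range at the swath edge and the sensor’s actual pulse-rate settings, and the function names are illustrative.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def swath_width(altitude_m: float, fov_deg: float) -> float:
    """Ground swath (m) over flat terrain, treating fov_deg as the full scan angle."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def line_spacing(swath_m: float, side_overlap: float) -> float:
    """Distance (m) between adjacent flight lines for a fractional side overlap (0.5 = 50%)."""
    return swath_m * (1.0 - side_overlap)


def max_pulse_rate(range_m: float, pulses_in_air: int = 1) -> float:
    """Highest pulse repetition rate (Hz) that keeps returns unambiguous."""
    round_trip_s = 2.0 * range_m / C
    # With N pulses allowed in flight, N pulses can be emitted per round-trip time.
    return pulses_in_air / round_trip_s


swath = swath_width(1200.0, 20.0)
print(f"swath: {swath:.0f} m, line spacing: {line_spacing(swath, 0.5):.0f} m")
print(f"one pulse in air: {max_pulse_rate(1200.0) / 1e3:.0f} kHz, "
      f"two pulses in air: {max_pulse_rate(1200.0, 2) / 1e3:.0f} kHz")
```

At 1200 m the round trip takes about 8 µs, which caps a single-pulse system near 125 kHz; allowing a second pulse in flight doubles that ceiling, which is the effect the “multiple pulses in air” mode exploits. With 50% side overlap, adjacent lines sit half a swath apart, so every ground point falls inside two swaths.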

Engineers and technicians integrate the Global Ecosystem Dynamics Investigation instrument’s optical bench and box structure. [B. Lambert / GSFC]

Space-based lidar measurements come from NASA’s Global Ecosystem Dynamics Investigation (GEDI) instrument on the International Space Station (ISS). GEDI is a full-waveform lidar instrument, optimized to provide global measures of vegetation structure, and has the highest resolution and densest sampling of any lidar ever put in orbit. It uses three Nd:YAG lasers (1064 nm), each firing 242 short pulses (14 ns long) per second toward Earth’s surface with a pulse energy of 10 mJ.
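
Since 10 mJ is an energy per pulse, the corresponding powers follow from the pulse width and repetition rate. The sketch below is a back-of-the-envelope check that treats each pulse as a rectangle 14 ns wide (real pulses are closer to Gaussian, so the true peak differs somewhat); the function names are illustrative.

```python
def peak_power_w(pulse_energy_j: float, pulse_width_s: float) -> float:
    """Approximate peak power (W), treating the pulse as a rectangle of the given width."""
    return pulse_energy_j / pulse_width_s


def average_power_w(pulse_energy_j: float, rep_rate_hz: float) -> float:
    """Time-averaged optical power (W) of a pulse train."""
    return pulse_energy_j * rep_rate_hz


energy = 10e-3  # 10 mJ per pulse
width = 14e-9   # 14-ns pulse length
rate = 242.0    # pulses per second, per laser

print(f"peak power per pulse: {peak_power_w(energy, width) / 1e3:.0f} kW")
print(f"average power per laser: {average_power_w(energy, rate):.2f} W")
print(f"average power, three lasers: {3 * average_power_w(energy, rate):.2f} W")
```

Each pulse peaks at roughly 0.7 MW, yet the average optical output of each laser is only about 2.4 W because the pulses are so short and so widely spaced in time.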

“Because the laser beam travels such a great distance, you can’t avoid beam divergence. By the time it reaches the ground, it’s about 25 meters in diameter,” said Hudak, a coinvestigator of the 3D Fuels Project. “So it’s a return of continuous energy, or a waveform, rather than a point cloud.”

From these waveforms, four types of structure information can be extracted: surface topography, canopy height metrics, canopy cover metrics and vertical structure metrics. One drawback of GEDI is that, due to the ISS’s orbital inclination, the instrument can cover only the temperate and tropical regions of Earth’s surface, between 51.6° N and 51.6° S.
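
GEDI’s canopy height metrics are relative-height (RH) percentiles: the height above the ground return at which a given fraction of the waveform’s cumulative energy, counted upward from the bottom, has been reached. The sketch below computes RH values from a synthetic two-peak waveform; it assumes the ground elevation is already known and skips the noise filtering, waveform decomposition and mode detection that the operational GEDI algorithms perform, and the function name is hypothetical.

```python
import numpy as np


def relative_heights(elevation_m: np.ndarray, energy: np.ndarray, ground_m: float,
                     percentiles: tuple[int, ...] = (25, 50, 75, 98)) -> dict[int, float]:
    """Height above ground (m) at which n% of the waveform energy has accumulated."""
    order = np.argsort(elevation_m)            # start from the bottom of the waveform
    z = elevation_m[order]
    cumulative = np.cumsum(energy[order])
    cumulative = cumulative / cumulative[-1]   # normalize to the range 0..1
    result = {}
    for n in percentiles:
        idx = int(np.searchsorted(cumulative, n / 100.0))  # first bin reaching n%
        result[n] = float(z[idx] - ground_m)
    return result


# Synthetic waveform: a narrow ground return at 0 m plus a broad canopy return centered at 18 m
z = np.arange(-5.0, 40.0, 0.15)  # elevation bins, m
ground = 1.0 * np.exp(-0.5 * ((z - 0.0) / 0.8) ** 2)
canopy = 0.6 * np.exp(-0.5 * ((z - 18.0) / 4.0) ** 2)
waveform = ground + canopy
print(relative_heights(z, waveform, ground_m=0.0))
```

Low percentiles sit near the ground return, while a high percentile such as RH98 approaches the top of the canopy, which is why it is often used as a canopy height estimate.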

The 3D Fuels Project, led by Prichard, integrates data from both terrestrial and airborne lidar to create precise 3D maps of the layers of canopy and understory fuels that could contribute to severe wildfire behavior. Hudak uses airborne lidar to calculate how much fuel is consumed in a wildfire, generating consumption maps that are simply the difference between pre- and postfire fuel load maps. These findings can then be used in fire behavior models to evaluate the effectiveness of fuel reduction treatments, accounting not only for the alteration of surface and canopy fuels but also for the resulting changes in wind flow. Such models, it’s hoped, could one day provide near-real-time predictions of fire and smoke behavior.
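
Because a consumption map is a cell-by-cell difference, it reduces to raster arithmetic once both fuel load maps sit on the same grid. A minimal sketch, assuming co-registered rasters in the same units (the values below are hypothetical); clipping negative differences to zero is one possible way to handle estimation noise, not necessarily Hudak’s choice.

```python
import numpy as np


def consumption_map(prefire: np.ndarray, postfire: np.ndarray) -> np.ndarray:
    """Per-cell fuel consumed, in the same units as the inputs (e.g., Mg/ha)."""
    if prefire.shape != postfire.shape:
        raise ValueError("pre- and postfire rasters must share one grid")
    consumed = prefire - postfire
    # Assumption: small negative values reflect estimation error rather than fuel gain.
    return np.clip(consumed, 0.0, None)


pre = np.array([[30.0, 25.0], [40.0, 10.0]])   # hypothetical prefire fuel loads, Mg/ha
post = np.array([[12.0, 26.0], [5.0, 10.0]])   # hypothetical postfire fuel loads, Mg/ha
consumed = consumption_map(pre, post)
# Fraction of the prefire load consumed, leaving zero where there was no fuel to begin with
fraction = np.divide(consumed, pre, out=np.zeros_like(pre), where=pre > 0)
print(consumed)
print(np.round(fraction, 2))
```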

Plume dynamics with Doppler lidar

Since 2013, Craig B. Clements, director of the Wildfire Interdisciplinary Research Center and Fire Weather Research Laboratory at San José State University, USA, has been chasing wildfires in a pickup truck fitted with a bevy of instruments, including a scanning Doppler lidar. He has ventured to the edge of dozens of wildfires, mostly in Northern California.

The California State University Mobile Atmospheric Profiling System deploying Doppler lidar during the Dixie Fire in 2021. [C. Clements]

The California State University Mobile Atmospheric Profiling System (CSU-MAPS), a joint facility developed by San José and San Francisco State Universities, is the most advanced mobile system of its kind in the country. A key component of CSU-MAPS, used to measure smoke plumes, is a commercial 1.5-µm scanning Doppler lidar (Halo Photonics XR). Doppler lidar is an active remote-sensing technology that observes the Doppler shift of light scattered by aerosols in the air.
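
The Doppler velocity follows from a simple relation between the measured frequency shift Δf, the laser wavelength λ and the line-of-sight velocity v: v = λΔf/2 for speeds far below the speed of light. The sketch below assumes λ = 1.5 µm as quoted above; commercial instruments typically report radial velocities directly, so this only illustrates the physics, and the function names are hypothetical.

```python
WAVELENGTH_M = 1.5e-6  # operating wavelength quoted for the CSU-MAPS lidar (approximate)


def radial_velocity(doppler_shift_hz: float, wavelength_m: float = WAVELENGTH_M) -> float:
    """Line-of-sight velocity (m/s) from the Doppler shift of backscattered light.

    The factor of 2 arises because the light is shifted once on reaching the moving
    particle and again when it is scattered back toward the receiver. Sign conventions
    vary between instruments; here a positive shift means motion toward the lidar.
    """
    return wavelength_m * doppler_shift_hz / 2.0


def doppler_shift(velocity_m_s: float, wavelength_m: float = WAVELENGTH_M) -> float:
    """Inverse relation: frequency shift (Hz) produced by a line-of-sight velocity."""
    return 2.0 * velocity_m_s / wavelength_m


print(f"1 m/s -> {doppler_shift(1.0) / 1e6:.2f} MHz")
print(f"20 MHz -> {radial_velocity(20e6):.1f} m/s")
```

A 1 m/s line-of-sight speed shifts 1.5-µm light by only about 1.3 MHz, a tiny fraction of the roughly 200-THz optical frequency, which is why Doppler lidars rely on coherent (heterodyne) detection to measure it.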

“We scan the plume to understand the plume’s structure and what is happening inside, in terms of the flows and fire-induced winds,” Clements said. “And then we can see what the wind field is doing around the fire, which is really important to understand how fires evolve and the fire behavior.”

The Doppler lidar records two quantities: the attenuated backscatter coefficient and the Doppler velocity. The attenuated backscatter coefficient is sensitive to micrometer-sized aerosols—primarily to 0.5- to 2-µm particles in the case of forest fire smoke plumes. The Doppler velocities, which typically result from the motion of airborne smoke and ash particles, are used to investigate aspects of the airflow in and around the convective plumes and within the ambient convective boundary layer. In addition, CSU-MAPS includes a microwave temperature–humidity profiler, a radiosonde system for atmospheric parameters and an automated weather station.
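
One standard way to turn Doppler velocities into a wind estimate is the velocity-azimuth display (VAD) technique: the lidar scans a cone at a fixed elevation angle, and a sine-plus-cosine fit in azimuth yields the wind components. This is a generic textbook retrieval offered for illustration, not necessarily the analysis the CSU-MAPS team uses; it assumes the wind is uniform across the scan circle, which holds far better in the ambient boundary layer than inside a convective plume, and the function name and synthetic wind are hypothetical.

```python
import numpy as np


def vad_fit(azimuth_deg: np.ndarray, radial_ms: np.ndarray, elevation_deg: float) -> tuple[float, float, float]:
    """Velocity-azimuth display retrieval of (u, v, w) in m/s from one conical scan.

    Assumes a horizontally uniform wind, azimuth measured clockwise from north and
    radial velocity positive away from the lidar.
    """
    theta = np.radians(azimuth_deg)
    phi = np.radians(elevation_deg)
    # Model: v_r = u*sin(theta)*cos(phi) + v*cos(theta)*cos(phi) + w*sin(phi)
    A = np.column_stack([np.ones_like(theta), np.sin(theta), np.cos(theta)])
    (a0, a1, a2), *_ = np.linalg.lstsq(A, radial_ms, rcond=None)
    return float(a1 / np.cos(phi)), float(a2 / np.cos(phi)), float(a0 / np.sin(phi))


# Synthetic scan: 8 m/s eastward, -3 m/s northward, 0.5 m/s updraft, 60-degree elevation
az = np.arange(0.0, 360.0, 10.0)
phi = np.radians(60.0)
vr = (8.0 * np.sin(np.radians(az)) - 3.0 * np.cos(np.radians(az))) * np.cos(phi) + 0.5 * np.sin(phi)
vr = vr + np.random.default_rng(1).normal(0.0, 0.2, az.size)  # simulated measurement noise
u, v, w = vad_fit(az, vr, 60.0)
print(f"u = {u:.2f} m/s, v = {v:.2f} m/s, w = {w:.2f} m/s")
```

Fitting the first harmonic in azimuth separates the horizontal wind (the sine and cosine terms) from the vertical component (the constant offset).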

Once a fire of interest is detected, Clements and his team load up the truck and speed toward the blaze, aiming to position themselves about 1 to 3 km from the fire front. “We always have a safety plan, an exit strategy and a safety zone where we can maintain ourselves,” he said.

The Wildfire Interdisciplinary Research Center is a National Science Foundation Industry–University Cooperative Research Center focused on wildfire science and management. The center has fire-, smoke- and weather-forecasting systems based on a coupled fire–atmosphere model that operates in real time for the California Department of Forestry and Fire Protection and the US Forest Service. Every six hours, supercomputers are fed with weather data, satellite fire observations, airborne infrared fire perimeters and fuel moisture to forecast future fire risk, fire progression and smoke dispersion.

Ultimately, Clements wants to be able to send his Doppler lidar data over a satellite link directly from the truck to the center, where his colleagues will update and improve the model while the wildfire is happening. He recently received funding to create a network of mobile Doppler lidars in Northern California, made up of five systems strategically placed to monitor fire weather conditions. Next, he aims to expand the network with 14 more systems distributed across the entire state.

“If we have better observations of the fire environment through, say, a Doppler lidar network, then it will help constrain the model and provide an improved forecast,” said Clements. “The more accurate the fire model, the less community risk, and the better it is for all the sectors involved.”

Early detection with thermal imaging

Detecting fires early is crucial in their management. But satellites and fire lookouts may be unable to sense fire in its earliest stages, particularly amid clouds, vegetation or heavy smoke. In addition, satellite images often lack sufficient resolution—either spatial or temporal—to properly map a fire’s specific location, extent and environment. Wireless sensor networks, such as those that measure particulate matter in smoke, are fixed in place and therefore inherently limited in terms of coverage.

As an alternative method, Fatemeh Afghah and her colleagues foresee the use of drones equipped with RGB and thermal imaging as a valuable tool for the early detection and assessment of wildfires. “If we have lightning in a forest, we can send these drones to monitor the environment,” said Afghah, an associate professor of electrical and computer engineering at Clemson University, USA. “If there is a small fire, they can hover there and keep an eye on them to see if they will turn into something serious or not.”

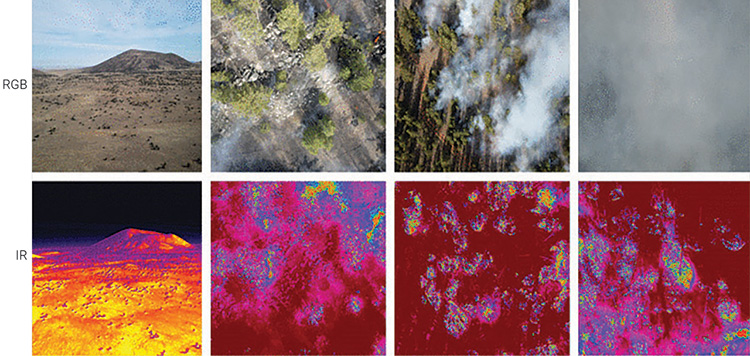

In November 2021, Afghah’s team collected RGB/IR drone videos and images during a prescribed burn in northern Arizona to test the feasibility of such an approach. The researchers employed a commercial drone (DJI Mavic 2 Enterprise Advanced) equipped with side-by-side, high-resolution thermal and visual cameras for simultaneous RGB/IR imagery.

RGB and IR frame pairs from the FLAME2 dataset. [X. Chen et al., IEEE Access 10, 121301 (2022); doi: 10.1109/ACCESS.2022.3222805]

The thermal camera contains an uncooled vanadium oxide microbolometer sensor that captures a 640×512-px array and can characterize temperatures from −40°C to 150°C in high-gain mode and from −40°C to 550°C in low-gain mode. The camera’s spectral range is 8 to 14 μm. The 1/2-in. CMOS visual sensor captures passive RGB imagery with a maximum image size of 8000×6000 px. Video was recorded at either 3840×2160 px or 1920×1080 px, both at 30 frames per second (fps).

Thermal imaging allowed for tracking of the fire line, embers and details of the terrain, whereas smoke obscured these features in visible-spectrum imagery. With the power of combined imagery, Afghah and her colleagues were able to make out the rough position and movement of the fire under most combustion, weather and visibility conditions.
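
Locating the fire line and embers in a thermal frame can start with something as simple as thresholding per-pixel temperature and grouping the hot pixels into connected regions. The sketch below assumes the camera output has already been converted to a temperature array in °C; the 150°C threshold, the function name and the synthetic frame are hypothetical, a real pipeline would also handle gain-mode saturation, emissivity and camera motion, and this is a much cruder baseline than the deep-learning models Afghah’s team is training.

```python
from collections import deque

import numpy as np


def hot_regions(temps_c: np.ndarray, threshold_c: float, min_pixels: int = 1) -> list[dict]:
    """Group pixels hotter than threshold_c into 4-connected regions and summarize each."""
    hot = temps_c > threshold_c
    seen = np.zeros_like(hot, dtype=bool)
    rows, cols = hot.shape
    regions = []
    for r0, c0 in np.argwhere(hot):  # seed pixels in row-major order
        r0, c0 = int(r0), int(c0)
        if seen[r0, c0]:
            continue
        # Breadth-first flood fill collects every hot pixel connected to the seed
        queue = deque([(r0, c0)])
        seen[r0, c0] = True
        pixels = []
        while queue:
            r, c = queue.popleft()
            pixels.append((r, c))
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and hot[nr, nc] and not seen[nr, nc]:
                    seen[nr, nc] = True
                    queue.append((nr, nc))
        if len(pixels) >= min_pixels:
            pr, pc = zip(*pixels)
            regions.append({
                "pixels": len(pixels),
                "centroid": (float(np.mean(pr)), float(np.mean(pc))),  # (row, column)
                "max_temp_c": float(max(temps_c[p] for p in pixels)),
            })
    return regions


frame = np.full((512, 640), 25.0)   # 640x512 frame at ambient temperature, deg C
frame[100:104, 200:230] = 320.0     # a strip of flame front
frame[300, 400] = 180.0             # an isolated ember
for region in hot_regions(frame, threshold_c=150.0):
    print(region)
```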

The project’s goal is to collect data to train deep-learning models that can automatically classify the presence of fire or smoke in a given image. Afghah says the addition of IR imagery has given their models much higher classification accuracy, greater than 94%. They have made two RGB/IR datasets—FLAME and FLAME2, both based on prescribed burns—freely available for other researchers to use.
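
One simple way to hand paired frames to a classifier is early fusion: resample the thermal frame onto the RGB grid and stack it as a fourth input channel. The sketch below assumes the two frames are already aligned and uses naive nearest-neighbor resampling; because the cameras have different fields of view and aspect ratios, real pipelines need proper co-registration, the FLAME2 authors’ own fusion strategy may differ, and the function names are hypothetical.

```python
import numpy as np


def resize_nearest(img: np.ndarray, height: int, width: int) -> np.ndarray:
    """Nearest-neighbor resize of an H x W (or H x W x C) array."""
    rows = np.arange(height) * img.shape[0] // height
    cols = np.arange(width) * img.shape[1] // width
    return img[rows[:, None], cols[None, :]]


def fuse_rgb_ir(rgb: np.ndarray, ir: np.ndarray) -> np.ndarray:
    """Stack an 8-bit RGB frame and an IR frame into one H x W x 4 array scaled to [0, 1]."""
    h, w = rgb.shape[:2]
    ir_resized = resize_nearest(ir, h, w).astype(np.float32)
    span = ir_resized.max() - ir_resized.min()
    ir_norm = (ir_resized - ir_resized.min()) / span if span > 0 else np.zeros_like(ir_resized)
    rgb_norm = rgb.astype(np.float32) / 255.0
    return np.concatenate([rgb_norm, ir_norm[..., None]], axis=-1)


def accuracy(predicted: np.ndarray, labels: np.ndarray) -> float:
    """Fraction of frames whose fire/no-fire prediction matches the label."""
    return float(np.mean(predicted == labels))


# Synthetic, downsampled stand-ins for a 16:9 RGB frame and a 5:4 thermal frame
rgb = np.random.default_rng(2).integers(0, 256, size=(216, 384, 3), dtype=np.uint8)
ir = np.random.default_rng(3).uniform(20.0, 400.0, size=(128, 160))
x = fuse_rgb_ir(rgb, ir)
print(x.shape, x.dtype)
print(accuracy(np.array([1, 0, 1, 1]), np.array([1, 0, 0, 1])))
```

Accuracy here is simply the fraction of correctly labeled frames, the same kind of metric as the classification figure Afghah cites.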

“Say that we have an image of a forest area, and there are a couple of flames there,” said Afghah. “Our models can say exactly where those flames are located, so you can put the attention of first responders to the area where the fire is happening.”

Ultimately, she envisions a fleet of drones, based in the wilderness at wireless charging stations powered by solar panels, that would execute a regular flight pattern on days when the chance of fire is especially high. While her team has not yet received permission to fly its drones over an actual wildfire, these initial results demonstrate the potential of low-cost, semiautonomous sensing systems for real-time wildfire detection.

“This year, we have attended multiple fires in different states—Florida, Arizona, Nevada, Oregon—to collect diversified datasets from different forest areas,” she said. “In this way, we want to reduce the impact of bias on training data, making our deep-learning models more generalizable and accurate.”

Learning to live with fire

Wildfire management will undoubtedly become more challenging due to the effects of climate change. But preventing all fires is neither possible nor desirable from an ecological standpoint. Therefore, many experts stress, humans need to learn how to coexist with wildfire.

This feature has highlighted research in the United States, but fire is a global problem, and it is being tackled around the world. Scientists in New South Wales, Australia, are combining “smart sensing” at a range of scales (including development of the first Australian satellite designed to predict bushfires) to detect fires, assess landscape and infrastructure, inform real-time fire response and monitor air and water quality. Meanwhile, in Europe, projects funded under the EU’s Horizon 2020 research and innovation program are taking advantage of remote-sensing and artificial-intelligence technologies to create better fire ignition and prediction models.

Optics can assist in fire adaptation and resilience through fuel management, fire and smoke prediction models and early detection—but research findings only go so far and must translate into sufficient action. Fuel treatment methods, such as prescribed burning or mechanically thinning vegetation, and land-use planning will be crucial aspects of risk mitigation.

“Indigenous people have lived with a lot of fire in the western United States. Prior to settler colonialism, many landscapes were maintained with cultural burning practices, so unplanned wildfires were not as severe and didn’t produce as much smoke,” said Prichard. “We need to, as a society, think through how we move toward that, because we’re not going to put all these fires out. There’s no way.”

Meeri Kim is a freelance science journalist based in Los Angeles, CA, USA.

For references and resources, visit: optica-opn.org/link/0923-wildfire.