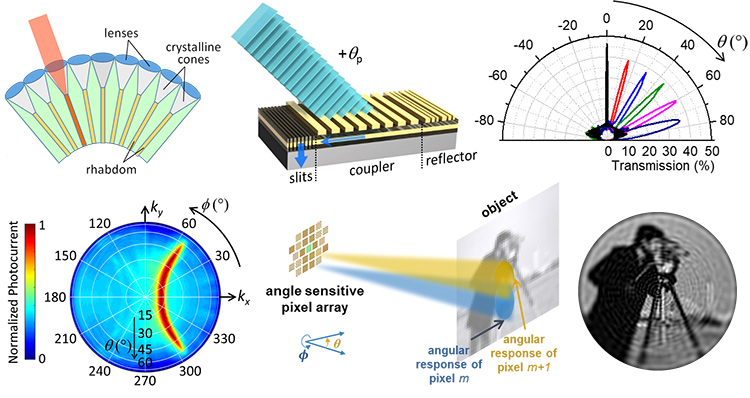

Top left: Compound eye of common arthropods. Top middle: Illustration of the devices developed in this work. Top right: Design simulation results showing the polar-angle dependence of the responsivity of different devices. Bottom left: Measured photocurrent of a representative device versus polar and azimuthal illumination angles. Bottom middle: Schematic illustration of the imaging geometry. Bottom right: Example of reconstructed image.

Traditional cameras used in photography and microscopy adopt a human-eye architecture; a lens is used to project an image of the object of interest onto a photodetector array. This arrangement can provide excellent spatial resolution, but because of aberration effects, it suffers from a fundamental trade-off between small size and large field of view (FOV). In nature, the solution devised by evolution to address this issue is the compound eye,1 universally found among the smallest animal species such as insects and crustaceans.

Typical compound eyes consist of a densely packed array of many imaging elements pointing along different directions. Unfortunately, their curved geometry, which is incompatible with standard planar semiconductor technologies, severely complicates their optoelectronic implementation. As a result, creating an artificial compound eye requires the development of complex nontraditional fabrication processes,2,3 which limit manufacturability and achievable resolution. Planar geometries involving lenslet arrays have been investigated,4 but these suffer from small FOVs (less than 70°) limited by the microlens f-number.

We recently reported a novel compound-eye camera architecture that leverages the design flexibility of metasurface nanophotonics and the data-processing capabilities of computational imaging to provide an ultrawide FOV greater than 150° in a planar lensless format.5 In this architecture, each pixel of a standard image sensor array is coated with a specially designed ensemble of metallic nanostructures. The ensemble transmits only light incident along a small, geometrically tunable distribution of angles. Computational imaging techniques then enable high-quality image reconstruction from the combined signals of all pixels. We designed, fabricated and characterized a set of near-infrared devices that provided directional photodetection peaked at different angles, and then demonstrated their imaging capabilities based on their angular-response maps.
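To make the reconstruction step concrete, the sketch below treats the problem in one angular dimension as a linear system: each pixel signal is the scene weighted by that pixel's angular response, and the scene is recovered by regularized least squares. Everything in it is an illustrative assumption rather than the procedure of ref. 5: the Gaussian response shape, the lobe width, the pixel count, the noise level and the choice of Tikhonov regularization are all hypothetical.

```python
import numpy as np


def gaussian_angular_response(theta, peak, width):
    """Hypothetical pixel response: a Gaussian lobe centered at `peak` (degrees)."""
    return np.exp(-0.5 * ((theta - peak) / width) ** 2)


def build_sensing_matrix(pixel_peaks, scene_angles, width=5.0):
    """Row i holds the response of pixel i to light arriving from each scene angle."""
    return gaussian_angular_response(
        scene_angles[None, :], pixel_peaks[:, None], width
    )


def reconstruct(A, y, reg=1e-2):
    """Tikhonov-regularized least squares: argmin ||A x - y||^2 + reg * ||x||^2."""
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + reg * np.eye(n), A.T @ y)


rng = np.random.default_rng(0)

# Assumed geometry: a 150-degree field of view sampled at 61 scene angles,
# observed by 121 pixels whose response peaks span the same angular range.
scene_angles = np.linspace(-75.0, 75.0, 61)
pixel_peaks = np.linspace(-75.0, 75.0, 121)
A = build_sensing_matrix(pixel_peaks, scene_angles)

# Assumed test scene: two smooth bright regions at different angles.
scene = (
    np.exp(-0.5 * ((scene_angles + 30.0) / 10.0) ** 2)
    + 0.6 * np.exp(-0.5 * ((scene_angles - 20.0) / 10.0) ** 2)
)

# Simulated pixel signals with a small amount of additive noise.
y = A @ scene + 0.01 * rng.standard_normal(A.shape[0])

estimate = reconstruct(A, y)
rel_error = np.linalg.norm(estimate - scene) / np.linalg.norm(scene)
print(f"relative reconstruction error: {rel_error:.3f}")
```

In a real device, the rows of `A` would presumably come from measured angular-response maps rather than an analytic lobe, and the regularization weight would be tuned to the sensor noise.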

By virtue of its lensless nature, this approach can provide further miniaturization and higher resolution compared with previous implementations, together with a potentially straightforward manufacturing process compatible with existing image-sensor technologies. We believe these results are significant for applications requiring extreme size miniaturization combined with ultrawide FOVs, such as chip-on-the-tip endoscopy, implantable or swallowable cameras and autonomous drone navigation. Furthermore, they highlight the tremendous opportunities offered by the synergistic combination of metasurface technology and computational imaging to enable advanced imaging functionalities.

Researchers

Leonard Kogos, Yunzhe Li, Jianing Liu, Yuyu Li, Lei Tian and Roberto Paiella, Boston University, Boston, MA, USA

References

1. M.F. Land and D.E. Nilsson. Animal Eyes (Oxford Univ. Press, 2002).

2. Y.M. Song et al. Nature 497, 95 (2013).

3. K. Zhang et al. Nat. Commun. 8, 1782 (2017).

4. J. Duparré et al. Appl. Opt. 44, 2949 (2005).

5. L. Kogos et al. Nat. Commun. 11, 1637 (2020).