Researchers at the University of Zurich fitted this drone with an “event camera” to move quickly and in low light. [Courtesy of UZH]

Carnegie Mellon University’s “Chimp” was one of 23 robots to compete in the 2015 DARPA Robotics Challenge, held in California, USA. [DARPA]

At the final of the Defense Advanced Research Projects Agency (DARPA) Robotics Challenge, held in Pomona, California, USA, in June 2015, research groups from around the world competed in a race designed to push modern robots to the limit. Each of the 23 mechanized contestants had to carry out a series of tasks that would be needed in a disaster zone too dangerous for humans to enter. Among other things, they had to drive a car, open a door, turn a valve, walk through rubble and climb a set of stairs.

Many of the robots rose to the challenge, with three of them completing the course within the allotted hour. But in doing so, the robots also highlighted just how unskilled they remain compared to people. Excruciatingly slow, clumsy and stupid, they suffered numerous falls and crashes. Indeed, only one of the many machines to fall down—“Chimp” from Carnegie Mellon University in Pittsburgh, USA—managed to get back up again.

The problems encountered at the DARPA event reflect the challenges faced by roboticists more broadly. Robots are increasingly being designed for applications that call for autonomy and adaptability, beyond the safe and predictable confines of repetitive assembly-line tasks. From machines that move elderly people around care homes and stock-taking devices that roam busy supermarkets to self-driving cars and aerial drones, these robots must navigate complex environments and interact with the humans that populate them.

A robot from San Francisco-based Bossa Nova moves around supermarkets, using lidar and cameras to record stock levels while avoiding shoppers. [Bossa Nova]

Doing so safely and efficiently in the future will rely less on improving robotic motion than on improving the way robots acquire and process the sensory data used to guide that motion, argues David Dechow, a machine vision expert at industrial robotics manufacturer FANUC America. Engineers, he reckons, have pretty much “solved the kinematic issues, so that a robot can move around without bumping into too many things.” But technology that will allow intuitive gripping and proper object recognition, he says, “is still a long way off.”

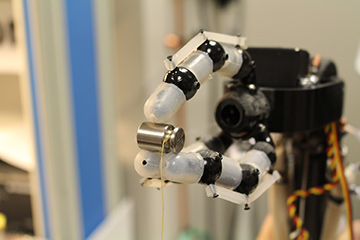

Yong-Lae Park, now at Seoul National University in South Korea, built a robotic hand that uses fiber Bragg grating sensors to improve dexterity. [Kevin Low]

Yong-Lae Park, a mechanical engineer at Seoul National University in South Korea, agrees that better sensors will be an essential part of tomorrow’s robots. Park is one of many researchers today using optics and photonics technology to improve robots’ awareness of the world around them—in his case, by employing fiber sensors to make humanoids more dextrous. “For robots to be part of our daily lives, we need to have a lot of physical interactions with them,” he says. “If they can respond better, they can have more autonomy.”

Creatures of habit

Robots have been used in automobile factories for decades, where they do everything from painting and welding to putting raw metal into presses and assembling parts to create finished vehicles. As Dechow points out, robotic vision systems have become more and more important since they allow robots to account for small changes in the position of components on an assembly line.

Vision-guided robots carry out a series of precise movements based on the output from one or more imaging technologies, such as single cameras, stereo vision or time-of-flight sensors. Plenty of other industries use this technology, including electronics manufacturers and food producers.
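
The position correction described above can be illustrated with a minimal sketch. It assumes a hypothetical setup in which parts lie flat, so a planar pose (x, y, rotation) is enough, and the camera has already been calibrated to the robot's base frame. The names `Pose2D` and `corrected_pick` are illustrative, not any vendor's API. The idea is to express the taught grasp relative to the part, then re-attach it to wherever the camera now sees the part.

```python
import math
from typing import NamedTuple


class Pose2D(NamedTuple):
    """Planar pose in the robot base frame (camera assumed pre-calibrated to it)."""
    x: float      # mm
    y: float      # mm
    theta: float  # rad, rotation about the vertical axis


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Map pose b, expressed in the frame of pose a, into a's parent frame."""
    c, s = math.cos(a.theta), math.sin(a.theta)
    return Pose2D(a.x + c * b.x - s * b.y, a.y + s * b.x + c * b.y, a.theta + b.theta)


def inverse(p: Pose2D) -> Pose2D:
    """Inverse rigid transform: translation -R^T t, rotation -theta."""
    c, s = math.cos(p.theta), math.sin(p.theta)
    return Pose2D(-(c * p.x + s * p.y), -(-s * p.x + c * p.y), -p.theta)


def corrected_pick(taught_part: Pose2D, taught_pick: Pose2D, seen_part: Pose2D) -> Pose2D:
    """Shift a taught pick pose so that it follows the part to where the camera now sees it."""
    grasp_in_part = compose(inverse(taught_part), taught_pick)  # grasp relative to the part
    return compose(seen_part, grasp_in_part)
```

Suppose a part was taught at (100, 50) mm with zero rotation and later turns up at (102, 53) mm, rotated by 90°. A grasp taught 10 mm along the part's x axis then moves to (102, 63) mm.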

Even clothing manufacturing, which historically has relied heavily on cheap human labor, looks set for an overhaul. A spin-off company from the Georgia Institute of Technology, USA, known as SoftWear has built a sewing robot that can handle soft fabrics—something that robots typically find hard to do—by using a specialized high-speed camera to keep track of individual threads.

Despite advances, however, industrial machines remain far from the science-fiction idea of robots. Often comprising individual robotic arms, today’s robots carry out specific—albeit increasingly sophisticated—tasks and are rooted to a particular spot on the factory floor. Beyond assembly lines, public and private research organizations have made a wide variety of humanoid robots for different purposes (Honda’s running, hopping, soccer-playing Asimo being one example), but these still have limited capabilities.

[Hanh Le]

Cutting-edge optics

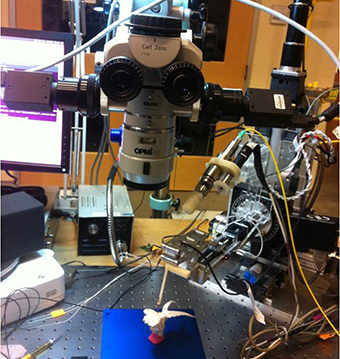

Many surgical robots, such as da Vinci, made by Intuitive Surgical in California, USA, are operated by a surgeon sitting at a console equipped with a monitor and joysticks. This setup allows the surgeon to carry out operations more steadily and precisely than they could do unaided. But Jin Kang and his colleagues at Johns Hopkins University, USA, are instead working to make surgical robots fully autonomous and, to that end, are developing a 3-D imaging system using optical coherence tomography (OCT).

The system is based on laser scanning, explains Kang, but unlike lidar, which has a long range but low resolution, this technique yields short-range, high-resolution images, including moving ones. It relies on the very short coherence length of broadband laser light. Interference spectra are measured at high speed across the area being imaged and used to build up a 3-D view of both the tool tip and the tissue being operated on. In practice, generating high-resolution 3-D video of the surgical scene in real time is challenging, says Kang, and requires both high-speed OCT and advanced image processing.
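
The depth-from-spectrum principle Kang describes can be sketched in a few lines. This is a minimal, idealized illustration of Fourier-domain OCT, not the Johns Hopkins system. It assumes a single reflector and a flat source spectrum sampled uniformly in wavenumber k (real spectrometers usually need resampling from wavelength to k). It also uses the standard Gaussian-spectrum formula for axial resolution. The reflector's depth sets how fast the interference fringes oscillate across the spectrum, so a Fourier transform turns one measured spectrum into a depth profile (an A-scan).

```python
import cmath
import math


def axial_resolution(center_wavelength: float, bandwidth: float) -> float:
    """Coherence-limited axial resolution (FWHM, in air) for a Gaussian source spectrum.

    delta_z = (2 ln 2 / pi) * center_wavelength**2 / bandwidth, in the units of the inputs.
    """
    return (2 * math.log(2) / math.pi) * center_wavelength ** 2 / bandwidth


def interferogram(k: list[float], depth: float,
                  r_ref: float = 1.0, r_sample: float = 0.1) -> list[float]:
    """Idealized spectrum for one reflector `depth` beyond the reference path (flat source)."""
    amp = 2 * math.sqrt(r_ref * r_sample)
    return [r_ref + r_sample + amp * math.cos(2 * ki * depth) for ki in k]


def a_scan(spectrum: list[float], k: list[float]) -> tuple[list[float], list[float]]:
    """Turn a spectrum sampled uniformly in wavenumber k into a depth profile.

    A plain discrete Fourier transform is written out for clarity; an FFT library
    would be used in practice. cos(2*k*z) oscillates z/pi times per unit of k,
    so frequency bin m corresponds to depth pi * m / (n * dk).
    """
    n = len(spectrum)
    dk = k[1] - k[0]
    mean = sum(spectrum) / n
    fringes = [s - mean for s in spectrum]  # remove the non-interferometric (DC) term
    depths, magnitudes = [], []
    for m in range(n // 2 + 1):
        coeff = sum(f * cmath.exp(-2j * math.pi * m * i / n) for i, f in enumerate(fringes))
        depths.append(math.pi * m / (n * dk))
        magnitudes.append(abs(coeff))
    return depths, magnitudes
```

As an example, take a source spanning 1250 to 1350 nm, sampled at 512 points in k. A reflector 500 µm deep then produces a peak within one depth bin (about 8 µm) of 500 µm. For the same source, `axial_resolution(1300e-9, 100e-9)` returns roughly 7.5 µm. That fine, short-range resolution is why the method suits the surgical work described here.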

The technique is being developed to look inside the cornea and retina to improve eye surgery. It is also being used to guide cochlear implant surgery, a delicate procedure in which a tiny electrode is moved along a very small, curved channel inside the ear. The idea is to reduce damage during implantation, although, for the moment at least, the robot used during the operation is still human-operated and its use is limited to studies on animals and cadavers.

Kang’s group is also working on a system for intestinal surgery that would identify two ends of an intestine that need suturing and then do the necessary stitching. The system will ultimately exploit OCT and be fully autonomous, but it currently uses structured-light imaging. It is being tested on pigs, says Kang, and should enter clinical trials within the next three years.

Dexterity is a major stumbling block. NASA’s Robonaut 2 is among the most advanced humanoids; each of its hands comprises five digits and possesses 12 degrees of freedom, which allows it to grasp and manipulate a range of tools that might come in handy in space, such as scissors, power drills and tethers. But Park says that a limited number of sensors in each hand means that the robot is likely to perform best when carrying out pre-defined tasks requiring known, rather than improvised, movements. Whereas a single human fingertip contains thousands of sensors, Robonaut 2 has just 42 in its hand and wrist.

As Park explains, a paucity of sensors creates problems in two main ways. The first is an inability to measure the force applied to an object when grasping it. If, for example, a robot tries to pick up an egg, it will crush the egg if it applies too much force but drop it if it applies too little.
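
Park's egg example can be made concrete with a simple friction model. The sketch below assumes Coulomb friction at two fingertip contacts and a safety factor. The egg's mass and friction coefficient are placeholder values, not figures from the article, and so is any crushing limit passed in. It illustrates that a grip controller can stay inside the safe window only if it has a force measurement to compare against.

```python
G = 9.81  # gravitational acceleration, m/s^2


def min_grip_force(mass_kg: float, friction_coeff: float,
                   contacts: int = 2, safety: float = 1.5) -> float:
    """Normal force per contact needed for friction to support the object's weight.

    Coulomb model: total friction = contacts * friction_coeff * N must exceed m * g.
    """
    return safety * mass_kg * G / (contacts * friction_coeff)


def grip_correction(measured_force: float, f_min: float, f_max: float, gain: float = 0.5) -> float:
    """Proportional step nudging the measured grip force toward the middle of [f_min, f_max].

    f_max is a hypothetical crushing limit chosen for the object being handled.
    """
    if f_min >= f_max:
        raise ValueError("no safe window: the object would slip before it could be held safely")
    target = 0.5 * (f_min + f_max)
    return gain * (target - measured_force)
```

With an assumed 60 g egg and a friction coefficient of 0.4, `min_grip_force(0.06, 0.4)` gives about 1.1 N per finger. A controller would then call `grip_correction` repeatedly with the force sensor's latest reading. Without that reading, there is nothing to correct against.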

The second impediment is a lack of touch—the inability to identify where finger and surface come into contact. Darwin Caldwell, director of advanced robotics at the Italian Institute of Technology (IIT) in Genoa, Italy, points out that this could create problems even when doing something as apparently straightforward as picking up a chess piece, since grasping the piece slightly off-center could cause it to spin out of control and drop. “A robot could beat a grand master given enough computational power,” he says, “but in terms of physical manipulation it would fail to do what an 18-month child could do.”

Light work

One of the biggest limitations of current (electrical) sensors is the bulky wiring needed to operate them. To try and overcome this problem, Park, previously at Carnegie Mellon, developed a prototype robotic hand with three fingers made from rigid plastic and covered with a soft silicone rubber skin. Each finger contains eight fiber Bragg grating (FBG) sensors in the plastic and six in the skin. These sensors consist of periodic variations in an optical fiber’s refractive index that reflect a particular wavelength of incoming broadband light, and that wavelength shifts when the fiber is strained, allowing measurement of force and touch.

FBG sensors are attractive because many can potentially be placed along a single fiber. However, like Robonaut 2, Park’s robotic hand currently contains just 42 sensors (14 in each of its three fingers). He and his colleagues in Seoul are now working on a new version with more fibers in the skin and hence more sensors. They are also trying to iron out some technical problems, such as preventing the plastic from creeping after forces are applied and then removed, and making the skin sensors more accurate.
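
The sensing principle in the last two paragraphs rests on two standard fiber Bragg grating relations. A grating of period Λ in a fiber of effective index n_eff reflects the Bragg wavelength λ_B = 2 n_eff Λ. Axial strain ε shifts that wavelength by roughly Δλ_B/λ_B = (1 − p_e) ε. The sketch below applies both relations, assuming a typical silica photo-elastic coefficient p_e ≈ 0.22 and ignoring temperature, which also shifts the wavelength. To show how many gratings can share one fiber, it gives each grating its own wavelength slot and assigns each reflected peak to the slot it falls in. The slot scheme and sensor names are illustrative assumptions, not a description of Park's interrogation hardware.

```python
from dataclasses import dataclass

P_E = 0.22  # effective photo-elastic coefficient, a typical value for silica fiber (assumed)


def bragg_wavelength(n_eff: float, period: float) -> float:
    """Bragg condition: a grating of this period reflects lambda_B = 2 * n_eff * period."""
    return 2.0 * n_eff * period


def strain_from_shift(lambda_0: float, lambda_measured: float, p_e: float = P_E) -> float:
    """Axial strain from a Bragg-wavelength shift, using d(lambda)/lambda = (1 - p_e) * strain.

    Temperature also shifts the Bragg wavelength; that effect is ignored here.
    """
    return (lambda_measured - lambda_0) / (lambda_0 * (1.0 - p_e))


@dataclass
class Grating:
    name: str           # hypothetical sensor label, e.g. "finger1_skin3"
    lambda_0: float     # unstrained Bragg wavelength, m
    half_window: float  # half-width of the wavelength slot reserved for this grating, m


def decode(gratings: list[Grating], peaks: list[float]) -> dict[str, float]:
    """Match each grating to a reflected peak inside its slot and convert the shift to strain."""
    strains: dict[str, float] = {}
    for g in gratings:
        for p in peaks:
            if abs(p - g.lambda_0) <= g.half_window:
                strains[g.name] = strain_from_shift(g.lambda_0, p)
                break
    return strains
```

For example, a grating with n_eff = 1.447 and a 535.6 nm period reflects near 1550 nm. A 1.2 nm shift at that wavelength corresponds to about 0.1% strain.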

Because these fiber sensors are quite rigid, they don’t allow the fingers to be fully bent. However, Park and his colleagues have also made a waveguide sensor from silicone rubber coated with reflective gold that can stretch to twice its length. When the waveguide is bent or stretched, the gold cracks, with the amount of light that leaks out revealing the degree of distortion.
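
A light-loss sensor of this kind is read out by comparing detected power with launched power. The sketch below converts the two readings to a loss in decibels and maps that loss to stretch through a calibration table. The table values are entirely hypothetical; in practice they would come from stretching the actual waveguide on a test rig. Piecewise-linear interpolation is one simple choice, not necessarily what the Seoul group uses.

```python
import bisect
import math

# Hypothetical calibration: (loss in dB, strain) pairs, strain 1.0 meaning doubled length.
CALIBRATION = [(0.0, 0.0), (1.0, 0.2), (3.0, 0.6), (6.0, 1.0)]


def loss_db(p_in: float, p_out: float) -> float:
    """Optical loss in decibels between launched and detected power."""
    return -10.0 * math.log10(p_out / p_in)


def strain_from_loss(db: float, table=CALIBRATION) -> float:
    """Piecewise-linear interpolation of strain from loss, clamped to the calibrated range."""
    losses = [loss for loss, _ in table]
    if db <= losses[0]:
        return table[0][1]
    if db >= losses[-1]:
        return table[-1][1]
    i = bisect.bisect_right(losses, db)
    (l0, s0), (l1, s1) = table[i - 1], table[i]
    return s0 + (s1 - s0) * (db - l0) / (l1 - l0)
```

A strain of 1.0 means the waveguide has doubled in length, the limit mentioned above. Under this made-up calibration, halving the detected power (about 3 dB of loss) reads as roughly 0.6.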

As Park points out, all industrial robots to date have had rigid structures because they need to be precise. But, he argues, if robots are to become widespread in daily life, they need to be made from softer parts. Such “soft robots” would not only be safer, he says, but would also loosen the requirement for precision, allowing for a greater margin of error, for example, in the force used to grasp an egg.

To this end, Robert Shepherd, Huichan Zhao and colleagues at Cornell University in New York, USA, have also tested waveguides that measure strain via light loss. They built a soft prosthetic hand with five fingers, each made from rubber and containing three waveguides that are also made of rubber and clad with a silicone composite of lower refractive index. The fingers, which are filled with compressed air, can, with the assistance of a robotic arm, be scanned across a surface to reconstruct its shape and also be pressed against an object to work out how hard it is. In this way, the researchers were able to reconstruct the shape of a series of tomatoes and select the ripest of them.

When the going gets tough

Not everyone who works in robotics aims to endow machines with human-like features or capabilities. Surgical robots usually consist of several arms, attached to a base, that wield surgical instruments and cameras. To date, they have had almost no brain power of their own, relying instead on the intelligence and control of the surgeon conducting the operation, although that looks set to change (see sidebar, “Cutting-edge optics”).

Outside of the operating theater, however, emulating humans is often the name of the robot game. When it comes to responding to disasters, Caldwell points out, robots need to operate in a world designed for people. As at DARPA’s obstacle course, robots have to be able to carry out numerous tasks that don’t come naturally to them, including walking, climbing stairs, passing through narrow spaces, finding stepping stones and getting back up when they fall down. For this reason, many of the DARPA contestants and other robots built for disaster response rely on alternative means of getting about, such as wheels or caterpillar tracks.

Caldwell and his colleagues at IIT have developed a range of different humanoid and “animaloid” robots for going into disaster zones. As he explains, the machines are not designed to pull people out of wreckage, but to inspect the safety of buildings. The idea, he says, is to try and avoid what happened in the Basilica of Saint Francis of Assisi in Italy in 1997, when four people were killed in an earthquake while inspecting damage to the church following an earlier tremor.

A few weeks after an earthquake struck the central Italian town of Amatrice in 2016, IIT researchers sent in a humanoid robot torso on wheels to survey a bank building that had been badly damaged. The robot was controlled remotely and equipped with a number of different imaging systems, including lidar, which it used to build up a 3-D map of the building’s interior for later study by human experts.
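
The lidar mapping mentioned here rests on time-of-flight ranging. The sensor times a laser pulse's round trip and halves it to get range, then combines range with the beam's pointing angles to place a point in 3-D. The sketch below shows those steps under two stated assumptions. The robot's planar pose is taken as already known, and the sensor is placed at the robot's origin. Real mapping systems must also estimate that pose, typically with a simultaneous localization and mapping (SLAM) algorithm, which is not shown.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s (the small correction for air is ignored)


def tof_range(round_trip_s: float) -> float:
    """The pulse travels out and back, so range = c * t / 2."""
    return C * round_trip_s / 2.0


def to_point(range_m: float, azimuth: float, elevation: float) -> tuple[float, float, float]:
    """Convert one return (range and beam angles, in radians) to x, y, z in the sensor frame."""
    horizontal = range_m * math.cos(elevation)
    return (horizontal * math.cos(azimuth), horizontal * math.sin(azimuth),
            range_m * math.sin(elevation))


def to_map(point: tuple[float, float, float], robot_x: float, robot_y: float,
           robot_yaw: float) -> tuple[float, float, float]:
    """Place a sensor-frame point in the map frame, given the robot's (assumed known) planar pose."""
    x, y, z = point
    c, s = math.cos(robot_yaw), math.sin(robot_yaw)
    return (robot_x + c * x - s * y, robot_y + s * x + c * y, z)
```

A round trip of about 66.7 ns corresponds to a surface 10 m away. Accumulating many such points, each placed with `to_map`, builds up the kind of 3-D map of a building's interior described above.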

Other groups that have sent robots to disaster zones include those led by Robin Murphy at Texas A&M University, USA, and Satoshi Tadokoro at Tohoku University, Japan. Tadokoro’s team has built a partially tele-operated robot called Quince that negotiates disaster sites using a combination of wheels and caterpillar tracks and also uses lidar to generate 3-D maps. Quince was sent inside the Fukushima nuclear power plant, following the devastating Tohoku earthquake and tsunami, to provide information on the condition of cooling systems within the heavily damaged and highly radioactive facility.

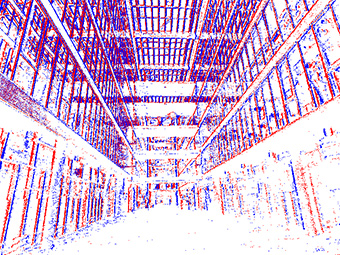

Whereas conventional cameras record intensity levels, event cameras respond to changes in brightness. [UZH]

A bright idea

Conventional cameras create images by recording intensity levels from a scene over a finite period, which means that objects moving quickly across the image frame can appear blurred. In addition, these devices struggle to generate images when there is either little ambient light or light levels change abruptly.

Inspired by the more efficient vision system of the human eye, event cameras use an array of pixels that generate outputs only in response to a certain minimum change in ambient brightness. A pixel triggers an “event” after recording the near-instantaneous voltage of a photodiode—proportional to the logarithm of the incident light intensity—and establishing that the new voltage exceeds (or is less than) that of the previous event by some given amount.
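
The triggering rule just described can be written as a simplified, idealized pixel model. It assumes noise-free intensity samples and a fixed contrast threshold on log intensity. Real sensors do this asynchronously in analog circuitry, and the threshold value and the `Event` record below are illustrative. The pixel remembers the log level at its last event and fires whenever the current level has moved at least one threshold away from it.

```python
import math
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    t: float       # timestamp of the sample that triggered the event, s
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 brighter, -1 darker


def pixel_events(x: int, y: int, samples: Iterable[tuple[float, float]],
                 threshold: float = 0.2) -> list[Event]:
    """Idealized event-camera pixel.

    `samples` are (time, intensity) pairs with intensity > 0; at least one is required.
    The pixel fires each time the current log intensity has moved at least `threshold`
    away from the log level stored at its last event; a large jump yields several events.
    """
    events: list[Event] = []
    it = iter(samples)
    _, first = next(it)
    reference = math.log(first)
    for t, intensity in it:
        level = math.log(intensity)
        while abs(level - reference) >= threshold:
            polarity = 1 if level > reference else -1
            events.append(Event(t, x, y, polarity))
            reference += polarity * threshold  # step the stored level toward the new one
    return events
```

A brightness step by a factor of about 1.65 (a log change of 0.5) with a threshold of 0.2 yields two positive events. A pixel whose brightness stays constant produces no events at all, which is why these cameras give poor images of static scenes.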

As Davide Scaramuzza of the University of Zurich in Switzerland explains, because changes in brightness are usually caused by relative motion between the camera and the scene, event cameras naturally record movement. And because each pixel operates independently of all the others, there is no blur. Indeed, he says, event cameras can respond to changes on much shorter timescales than frame-based devices: around a microsecond rather than typically several dozen milliseconds. They also consume far less power: about 10 mW rather than 1.5 W.

Event cameras do have their drawbacks, however: they generate very poor images when there is little or no motion in a scene. To show that these devices can nonetheless help drones fly autonomously, last year Scaramuzza’s team installed one on a quadrotor together with a conventional camera, an inertial measurement unit (accelerometers and gyroscopes) and a cellphone processor. The researchers found that the drone could fly in a circle even in near darkness, which it could not do without the event camera. It could also hover, a low-motion situation in which the conventional camera helps make up for the event camera’s weakness.

Drones are also potentially useful following a disaster but, to date, have always been remotely operated by humans. This limits their speed and maneuverability and can be stressful for the operator, as researchers found when using drones following a series of northern Italian earthquakes in 2012. Some researchers are therefore turning to event cameras, which might allow drones to move rapidly through complex environments on their own, even at dusk or dawn (see sidebar, “A bright idea”).

Underwater robots, meanwhile, face a different sort of challenge: communication, because water strongly attenuates electromagnetic waves. Sound waves can carry wireless links over long distances but generally support data rates of no more than a few thousand bits per second, while cables are cumbersome and limit the areas in which robots can operate.

To overcome these problems, a number of research groups have developed optical wireless systems. These typically use blue-green wavelengths, where seawater is most transparent, to achieve megabit-per-second data rates over ranges of a few hundred meters.
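
The gap between these data rates matters in practice. As a rough illustration, take a hypothetical 1-megabyte camera frame. Assume nominal rates of 5 kbit/s for an acoustic link and 1 Mbit/s for an optical one, with no protocol overhead. On those assumptions, the transfer times differ by a factor of 200.

```python
def transfer_time_s(payload_bytes: int, bit_rate_bps: float) -> float:
    """Time to send a payload over a link of the given raw bit rate (no protocol overhead)."""
    return payload_bytes * 8 / bit_rate_bps


LINKS = {  # illustrative nominal rates in line with the text, not measured figures
    "acoustic (~5 kbit/s)": 5e3,
    "blue-green optical (~1 Mbit/s)": 1e6,
}

if __name__ == "__main__":
    image_bytes = 1_000_000  # hypothetical 1 MB camera frame
    for name, rate in LINKS.items():
        print(f"{name}: {transfer_time_s(image_bytes, rate):,.0f} s")
```

This prints 1,600 s (about 27 minutes) for the acoustic link and 8 s for the optical one.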

The challenges of prediction

Just how long it will take for robots to play a major role in our lives remains to be seen, but one type of robot that is gaining increasing attention is the self-driving car. Currently being tested under human supervision in a number of American cities, these machines may gain full autonomy within a few years.

However, as some fairly high-profile accidents have revealed, the technology is not perfect. For example, the driver of a Tesla car operating on Autopilot was killed in Florida in 2016 when the vehicle failed to make out a large white truck against a bright sky. In March 2018, a pedestrian was hit and killed by an Uber self-driving car while crossing the road in Tempe, Arizona. As Caldwell notes, these machines struggle to anticipate unusual actions, such as someone running out in front of a moving vehicle. What’s needed, he argues, are sensors that are able to pick out telltale signs, like a pedestrian’s body language.

Being able to merge lidar with event cameras might help, according to Pierre Cambou, an analyst at French market research company Yole Développement. Such a technological fusion, he argues, could improve vehicles’ reactions to unpredictable events by reducing the amount of data to be processed and therefore speeding up processing.

Cambou is optimistic that robots can improve human well-being—potentially as much as historical innovations like steam power, electricity and computing—and is confident that we can develop the “social control” needed to deal with the dangers they pose.

Park is also upbeat, arguing that computing provides a template for the expansion of robotic technology. “If you think about computers in the 1970s and 80s, they were specialized machines only for experts,” he says. “But now computers are everywhere.”

Others, however, believe that the technological hurdles remain formidable. Dechow notes that robots collaborate more with people than they used to, but, he argues, this is due more to changing safety assessments than any radical improvement in technology. “Despite what the futurists say, we are a very long way off from having ambulatory robots directly replace humans in a manufacturing plant.”

Caldwell takes a similar view. Robots performing well-defined tasks could proliferate over the next 10 years or so, he reckons, but those able to handle unpredictable situations convincingly might take another 50. “They won’t be able to react like a human will,” he says, “not for a long time.”

Edwin Cartlidge is a freelance science journalist based in Rome.

References and Resources

- G.-J. Kruijff et al. “Rescue robots at earthquake-hit Mirandola, Italy: a field report,” IEEE Intl. Symp. Safe. Sec. Resc. Rob. (2012).
- S. Gurbani et al. “Robot-assisted three-dimensional registration for cochlear implant surgery using a common-path swept-source optical coherence tomography probe,” J. Biomed. Opt. 19, 057004 (2014).
- L. Jiang et al. “Fiber optically sensorized multi-fingered robotic hand,” IEEE/RSJ Int. Conf. Intel. Rob. Sys. (2015).
- H. Zhao et al. “Optoelectronically innervated soft prosthetic hand via stretchable optical waveguides,” Sci. Rob. 1, eaai7529 (2016).
- A. Vidal et al. “Ultimate SLAM? Combining events, images, and IMU for robust visual SLAM in HDR and high speed scenarios,” IEEE Rob. Autom. Lett. 3, 994 (2018).